AI in the Hands of Fraudsters: How to Protect Digital Products from New-Generation Attacks

Artificial intelligence (AI) has become an inseparable part of digital product development, but as the technology evolves, so do the ways it is abused. Stripe's newly published report, The 2025 State of AI and Fraud, paints a stark picture of this arms race, in which both fraudsters and defense systems use AI. Based on a survey of thousands of business leaders and an analysis of billions of transactions, the report asserts that we have entered a new era in the fight against fraud. The question is no longer whether a business uses AI for protection, but whether its technology can compete with increasingly industrialized, AI-driven attacks.

The report identifies two critical threats that are drastically reshaping the risk landscape. One is the staggering scale of card testing attacks, where criminals use AI to automate the validation of stolen card data, sometimes launching thousands of transactions a day against a single business. The other is the growing sophistication of fake identities: generative AI now creates fake profiles and documents convincing enough to slip through traditional Know Your Customer (KYC) systems. According to Stripe's survey, 30% of business leaders already believe that AI is exacerbating problems related to fake account creation and merchant fraud. Together, these two trends challenge digital platforms and marketplaces, where traditional, rule-based defenses are proving insufficient.

In Europe, the trend mirrors global patterns but also raises distinct challenges, shaped by local market conditions and proactive EU legislation. The shift in criminal methods toward manipulative social engineering and synthetic identities is becoming more pronounced here as well, fundamentally changing the trust and technology requirements for digital products.

Card-Not-Present Fraud and Synthetic Identities

With the growth of online commerce, Card-Not-Present (CNP) fraud has become the main threat in Europe. According to the FICO 2024 European Fraud Map, losses from card fraud are continuously increasing, with the CNP segment being the primary driver. Criminal methods have clearly shifted from traditional identity theft toward social engineering and phishing attacks that rely on psychological manipulation. According to the 2025 report from ENISA (the European Union Agency for Cybersecurity), phishing is the most common intrusion method, accounting for 60% of all cases. AI-based phishing campaigns now represent more than 80% of all social engineering activity worldwide.

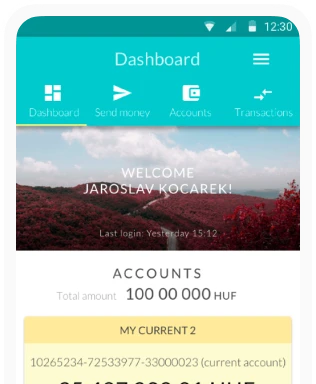

In synthetic identity fraud, criminals combine real, stolen data (e.g., a tax identification number) with fabricated information, creating a seemingly legitimate yet completely fictitious person. This is not just a financial problem but a deep UX and trust issue, as these attacks call into question the very foundations of the digital ecosystem. In parallel, fraudsters use AI to build huge databases of stolen card data and then use automated tools to initiate thousands of transactions daily (card testing) to find out which cards still work.
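Card testing tends to leave a recognizable trace: one source trying many different cards in a short time, with most attempts declined. The following is a minimal sketch of a sliding-window check for that pattern. The field names (`source`, `card_fingerprint`) and the thresholds are illustrative assumptions, not values from the Stripe report, and a production system would combine many more signals.

```python
from collections import defaultdict, deque
from dataclasses import dataclass


@dataclass
class Attempt:
    timestamp: float        # seconds since epoch
    source: str             # hypothetical grouping key, e.g. IP or device fingerprint
    card_fingerprint: str   # hypothetical hashed card identifier
    declined: bool


class CardTestingDetector:
    """Flags a source that tries many distinct cards, mostly declined, within a time window."""

    def __init__(self, window_seconds=600.0, max_distinct_cards=5, min_decline_ratio=0.8):
        # All three thresholds are illustrative assumptions.
        self.window_seconds = window_seconds
        self.max_distinct_cards = max_distinct_cards
        self.min_decline_ratio = min_decline_ratio
        self._recent = defaultdict(deque)

    def observe(self, attempt: Attempt) -> bool:
        """Record an attempt and return True if its source now looks like card testing."""
        window = self._recent[attempt.source]
        window.append(attempt)
        # Drop attempts that have aged out of the sliding window.
        while attempt.timestamp - window[0].timestamp > self.window_seconds:
            window.popleft()
        distinct_cards = {a.card_fingerprint for a in window}
        decline_ratio = sum(a.declined for a in window) / len(window)
        return (len(distinct_cards) > self.max_distinct_cards
                and decline_ratio >= self.min_decline_ratio)


detector = CardTestingDetector()
# Eight declined attempts with eight different cards from one source, one second apart.
flags = [
    detector.observe(Attempt(float(i), "203.0.113.7", f"card-{i}", declined=True))
    for i in range(8)
]
# The source is flagged from the sixth distinct card onward.
```

A returning shopper who retries a single card a few times never exceeds the distinct-card limit, which is why the check counts distinct cards rather than raw attempts.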

The Solution: Intelligent and Collaborative Defense

The good news is that the most effective response to AI-based attacks also lies in AI. According to the Stripe report, 47% of companies are already using AI to detect and prevent fraud. The secret to effective defense, however, is not just AI itself, but the development of network-level, collaborative models.

1. Network data-driven defense

The age of isolated, in-house defense systems is over. The most effective solutions learn from the collective knowledge of a vast network. Two examples from Stripe’s report:

- FreshBooks, using Stripe's network model, linked merchant risk with real-time transaction monitoring, and in doing so, prevented the registration of more than 300 fraudulent accounts in just three months.

- DoorDash incorporated the risk scores from Stripe's network into its own models, which reduced its chargeback costs by 10%.
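One simple way to fold an external network risk score into an in-house model is to average the two in log-odds space. The sketch below assumes both scores are probabilities between 0 and 1, and the `network_weight` parameter is a hypothetical tuning knob. It illustrates the general idea only and does not describe how Stripe, FreshBooks, or DoorDash actually combine scores.

```python
import math


def logit(p: float) -> float:
    """Convert a probability to log-odds, clamping to avoid infinities at 0 and 1."""
    p = min(max(p, 1e-6), 1 - 1e-6)
    return math.log(p / (1 - p))


def sigmoid(x: float) -> float:
    """Convert log-odds back to a probability."""
    return 1 / (1 + math.exp(-x))


def blended_risk(in_house_score: float, network_score: float,
                 network_weight: float = 0.5) -> float:
    """Weighted average of two risk probabilities in log-odds space.

    network_weight is an assumed tuning parameter: 0 ignores the network
    score, 1 ignores the in-house score.
    """
    z = ((1 - network_weight) * logit(in_house_score)
         + network_weight * logit(network_score))
    return sigmoid(z)


# A transaction the in-house model considers fairly safe (0.2) but the
# network has seen associated with fraud elsewhere (0.9).
combined = blended_risk(0.2, 0.9)
```

In practice the weight would be fitted on labeled outcomes, or the network score would simply become one more input feature of the in-house model.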

2. Collaborative architectures and regulatory tailwinds

The next step beyond network logic is privacy-conscious collaboration between companies. The EU's new Payment Services Regulation (PSR) explicitly encourages the sharing of fraud-related data among financial service providers. Two promising models exist for this:

- Consortium models: Members share anonymized data in a common database to identify cross-institutional fraud patterns.

- Federated learning: A decentralized machine learning technique in which participants train a shared AI model together without having to share their raw, sensitive customer data; only model updates leave each institution. According to research from Lucinity, this method enables collaborative learning without violating data protection regulations.
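The federated learning idea can be sketched with federated averaging: each participant refines the shared model on its own data, and a coordinator averages the resulting weights, weighted by sample count. The example below is a toy logistic regression in pure Python with made-up data. The function names are hypothetical, and real deployments typically add protections such as secure aggregation, which this sketch omits.

```python
import math


def sigmoid(x: float) -> float:
    return 1 / (1 + math.exp(-x))


def local_update(weights, data, lr=0.1, epochs=20):
    """A participant refines the shared weights on its private data.

    data is a list of (features, label) pairs; only the updated weights
    are returned, never the data itself.
    """
    w = list(weights)
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for x, y in data:
            pred = sigmoid(sum(wi * xi for wi, xi in zip(w, x)))
            for j, xj in enumerate(x):
                grad[j] += (pred - y) * xj
        w = [wi - lr * g / len(data) for wi, g in zip(w, grad)]
    return w


def federated_average(updates):
    """Sample-weighted mean of participants' weights; updates is [(weights, n_samples)]."""
    total = sum(n for _, n in updates)
    dim = len(updates[0][0])
    return [sum(w[j] * n for w, n in updates) / total for j in range(dim)]


def train_federated(participants, dim, rounds=10):
    """Run several rounds of local training followed by central averaging."""
    global_w = [0.0] * dim
    for _ in range(rounds):
        updates = [(local_update(global_w, data), len(data)) for data in participants]
        global_w = federated_average(updates)
    return global_w


# Toy data: features are [bias, normalized amount]; label 1 means fraud.
bank_a = [([1.0, 0.1], 0), ([1.0, 0.9], 1)]
bank_b = [([1.0, 0.2], 0), ([1.0, 0.8], 1), ([1.0, 0.95], 1)]
shared_model = train_federated([bank_a, bank_b], dim=2)
```

Neither bank ever sees the other's transactions; the coordinator only handles weight vectors and sample counts.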

3. Behavior-based identification – the new frontline of defense

As static data (passwords, personal information) becomes increasingly easy to compromise, the focus of defense is shifting toward analyzing users’ dynamic behavior. Behavioral biometrics monitors unique patterns such as typing rhythm, mouse movement, or the angle at which a phone is held. This technology operates frictionlessly in the background and can detect account takeover attempts or even whether a legitimate user is being instructed by a fraudster over the phone.
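As a rough illustration of the typing-rhythm signal, the sketch below compares the average gap between keystrokes in the current session with the user's historical baseline and expresses the difference as a z-score. The function name and sample values are assumptions for illustration; commercial behavioral biometrics products use far richer feature sets and learned models.

```python
from statistics import mean, stdev


def typing_anomaly_score(baseline_intervals_ms, session_intervals_ms):
    """How many baseline standard deviations the session's mean
    inter-key interval lies from the user's usual mean."""
    mu = mean(baseline_intervals_ms)
    sigma = stdev(baseline_intervals_ms)
    session_mu = mean(session_intervals_ms)
    if sigma == 0:
        # Degenerate baseline: any deviation at all is maximally unusual.
        return 0.0 if session_mu == mu else float("inf")
    return abs(session_mu - mu) / sigma


# Hypothetical baseline for one user: gaps between keystrokes in milliseconds.
baseline = [100, 110, 90, 105, 95]

usual_session = typing_anomaly_score(baseline, [102, 98, 101])
unusual_session = typing_anomaly_score(baseline, [180, 200, 190])
```

A high score alone does not prove fraud (the user may be tired or on a new keyboard), so a signal like this would normally feed a broader risk model rather than trigger a block by itself.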

The UX Challenge: Balancing Security and a Seamless Experience

Stronger protection inevitably brings security friction, i.e., the extra burden that security steps place on the user journey. This friction is a primary enemy of conversion, especially during the critical onboarding phase, where overly lengthy or complicated identification procedures are a major cause of user abandonment.

In Europe, the problem came to a head with the introduction of Strong Customer Authentication (SCA) mandated by PSD2 (Payment Services Directive 2, an EU law that regulates payment services to improve security and promote competition). Although the intention of increasing security is sound, poorly implemented SCA flows often lead to high rates of cart abandonment. The solution is not to reduce security, but to manage it intelligently. Strategies such as applying exemptions based on Transaction Risk Analysis (TRA) for low-risk payments, or using the modern EMV 3-D Secure 2 (EMV 3DS) protocol, allow authentication to run frictionlessly in the background, requiring active user intervention only in genuinely suspicious cases.
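The TRA exemption logic can be sketched as a lookup: the lower a payment provider's overall fraud rate, the larger the transaction it may exempt from SCA, provided the individual transaction is assessed as low risk. The bands below are the values commonly cited from the PSD2 regulatory technical standards for remote card payments; treat them as an assumption to verify against the current legal text. The function names and the `transaction_risk_is_low` input are hypothetical.

```python
# (maximum provider fraud rate, maximum exempt amount in EUR), strictest band first.
# Assumed from the PSD2 RTS for remote card payments; verify before relying on them.
TRA_BANDS = [
    (0.0001, 500.0),  # fraud rate up to 0.01%
    (0.0006, 250.0),  # fraud rate up to 0.06%
    (0.0013, 100.0),  # fraud rate up to 0.13%
]


def tra_exemption_limit(provider_fraud_rate: float) -> float:
    """Largest amount (EUR) the provider may exempt, or 0.0 if it qualifies for no band."""
    for max_rate, max_amount in TRA_BANDS:
        if provider_fraud_rate <= max_rate:
            return max_amount
    return 0.0


def needs_sca(amount_eur: float, provider_fraud_rate: float,
              transaction_risk_is_low: bool) -> bool:
    """Return True if the payment should go through an active SCA challenge.

    transaction_risk_is_low stands in for the outcome of a real-time risk
    analysis, which is outside the scope of this sketch.
    """
    if not transaction_risk_is_low:
        return True
    return amount_eur > tra_exemption_limit(provider_fraud_rate)


# An 80 EUR low-risk payment via a provider with a 0.10% fraud rate skips the challenge.
challenge_small = needs_sca(80.0, 0.001, transaction_risk_is_low=True)
# A 300 EUR payment via a provider with a 0.05% fraud rate exceeds its 250 EUR band.
challenge_large = needs_sca(300.0, 0.0005, transaction_risk_is_low=True)
```

Other exemptions and issuer-side decisions are not modeled here, so a real flow must still handle a challenge being requested despite an exemption claim.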

The most harmful event, however, is a false positive (or false decline), when the system incorrectly blocks a legitimate transaction. Its business impact far exceeds the damage caused by actual fraud: while the estimated value of global e-commerce card fraud was $48bn, the revenue lost to false declines reached $443bn, roughly nine times as much. In addition, 41% of customers will never return to a brand that wrongly rejected their payment, and 32% will voice their frustration on social media. Since no AI system is perfect, the UX design for handling false positives is critically important. The goal is to turn a negative experience into a trust-building interaction.

To this end, the following empathy-based design principles should be applied:

- Empathetic communication: Instead of a cold "Transaction declined" message, helpful wording that refers to the user's security ("For your security, we need to verify this payment") reduces frustration and the feeling of being suspected.

- Immediate, in-context solution: Instead of sending the customer to a dead end with a customer service phone number, an immediate, simple secondary authentication option should be provided on the interface (e.g., biometric or SMS code confirmation), allowing them to complete the transaction without interruption.

- Learning from mistakes: The system must learn from incorrectly flagged transactions that the user went on to verify. Feeding these confirmed false positives back into the model improves its accuracy over time, and placing the trusted customer on a whitelist can spare them a similarly frustrating experience in the future.
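The three principles above can be sketched as a small decision flow: a risky payment triggers a step-up with a reassuring message instead of a flat decline, and a successful verification is recorded both as a trusted customer and as a confirmed false positive for later model training. All class names, thresholds, and messages below are illustrative assumptions.

```python
from dataclasses import dataclass, field
from enum import Enum


class Outcome(Enum):
    APPROVE = "approve"
    STEP_UP = "step_up"   # ask for in-context secondary authentication
    BLOCK = "block"


@dataclass
class DeclineHandler:
    step_up_threshold: float = 0.7    # assumed: at or above this, verify before approving
    hard_threshold: float = 0.95      # assumed: at or above this, even trusted customers verify
    trusted: set = field(default_factory=set)
    confirmed_false_positives: list = field(default_factory=list)

    def decide(self, customer_id: str, risk_score: float):
        """Return (outcome, user-facing message) for a payment attempt."""
        if risk_score < self.step_up_threshold:
            return Outcome.APPROVE, "Payment approved."
        if customer_id in self.trusted and risk_score < self.hard_threshold:
            return Outcome.APPROVE, "Payment approved."
        return Outcome.STEP_UP, "For your security, we need to verify this payment."

    def record_step_up(self, customer_id: str, risk_score: float, verified: bool) -> Outcome:
        """Apply the result of the secondary authentication (e.g. biometric or SMS code)."""
        if not verified:
            return Outcome.BLOCK
        # The flag was a false positive: remember the customer and keep the
        # example so it can be fed back into model training.
        self.trusted.add(customer_id)
        self.confirmed_false_positives.append((customer_id, risk_score))
        return Outcome.APPROVE


handler = DeclineHandler()
first_try = handler.decide("customer-1", 0.8)        # step-up with an empathetic message
after_verification = handler.record_step_up("customer-1", 0.8, verified=True)
second_try = handler.decide("customer-1", 0.8)       # now approved without friction
```

Keeping a hard threshold for trusted customers is a deliberate choice in this sketch: a whitelist entry should reduce friction without becoming a bypass if the account is later taken over. A real system would also expire entries over time.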

The Strategy for the Age of Network Intelligence

The 2025 landscape makes it clear: the era of isolated, in-house defense systems is over. Sophisticated, AI-driven fraud can only be effectively combated with collective, network-level intelligence. In the future, with the spread of AI agents, the speed and complexity of attacks will continue to grow. For businesses developing digital products, the key to survival will be the development of adaptive, intelligent, and collaborative defense strategies.

For business leaders, this realization creates both a strategic imperative and an opportunity. The European regulatory environment – with the tightening of phishing fraud regulations in PSD3/PSR, the digital resilience requirements of DORA, and the ethical and transparency expectations of the AI Act – collectively points the way toward more advanced, resilient systems. The PSR's mandate for data sharing, for example, is no longer just an option, but a clear legislative intent to strengthen sector-level protection. The question, therefore, is not whether to participate in collaborative models like industry consortia or federated learning systems, but how to integrate them most effectively into our own strategy. Beyond mere compliance, the application of ethical, transparent, and privacy-respecting AI has become a clear competitive advantage and a cornerstone of brand trust.

For product development and UX teams, this paradigm shift entails concrete design tasks. The most resilient architecture is a layered, hybrid model that combines network intelligence (consortium data) with real-time, passive protection (behavioral biometrics). Equally important is the conscious design of the UX around security measures. The goal is to minimize "security friction" and handle false positives empathetically. Instead of a declined transaction being a frustrating dead end, a well-designed process allows the customer to immediately approve the operation with a simple, contextual secondary authentication. Security should not be presented as a necessary evil, but as a valuable, trust-building service. A seamless biometric login or a fast, AI-based registration is a positive brand interaction that sends the message: We care about you and your data.