Creating Voice User Interfaces is shaping up to be a walk in the park

English has become the common language of modern times in the north-western part of the world, and this greatly facilitates everyday communication. But half a billion people in Europe rightly ask: why should they learn a second language just to be able to control devices with voice commands?

Ergomania is a leading European expert on VUIs, i.e. Voice User Interfaces. Voice-based UIs have long been part of everyday life. They often go unnoticed: we use them without even thinking about it, simply communicating with a system through a VUI. However, the coronavirus pandemic and rising hygiene expectations clearly show that there is room for improvement in this area as well.

There is a high demand for VUI, but it is spreading slowly

Although there are significant technological differences between (and even within) countries, purely voice-based control of systems has only been partially implemented in most places.

Let’s take public transportation as an example. The doors of the vehicles are opened either by the driver or the system, or by the passengers pushing a button set in the doorframe or in the door itself. If you want to avoid touching the same surface that thousands of fellow travellers touched before you, you need to wear gloves or sanitize your hands right after getting off (which is something you ought to do anyway).

Despite the great demand for VUIs, the situation is similar with public information screens, screens in shopping malls, vending machines, electronic information desks, etc., where VUIs are installed only sporadically.

VUIs are found in more and more places

Voice-based interfaces can be found as user interfaces in phones, televisions, smart homes and many other products. With the development of voice recognition and smart home technology, the proportion and number of voice interactions is expected to increase.

Sometimes VUIs are optional add-ons to otherwise graphical user interfaces, for example when voice is used to search for movie titles on a smart TV.

Other times, the VUI is the primary or only way to interact with a product, as with smart speakers such as Amazon Echo Dot or Google Home; it is even possible to develop purely voice-based games.

Touching the screen is increasingly being replaced by voice-based use

At least in some cases. There has been a significant shift towards voice-based device use over the past decade, for a number of reasons. It is commonly observed that people grow tired of “using a screen” after a while and start reducing their use of devices. Even the digital wellbeing features built into most operating systems encourage this. According to fintech UX experts, the era of “voice first” has arrived, just as 2010 marked the beginning of the “mobile first” era.

However, other aspects also contribute: 30% of people are either functionally illiterate or too young to have started learning to read. In addition, 2% of the population is blind or partially sighted, and the number of people with dyslexia is also significant.

Thus, we can say that even in developed societies, one-third of the population either cannot use traditional interfaces effectively at all, or can use them only with difficulty and constant struggle. Add to that the cases where even a literate, healthy adult encounters hurdles when handling graphical interfaces (their hands are full, an epidemic is raging, they are wearing gloves, they are otherwise impeded, etc.), and we can already see that VUIs can provide a huge advantage for most products with a UI.

Voice assistants were introduced to the market partly to support people with disabilities, partly out of the drive to innovate and partly to combat “screen fatigue”, but they soon became one of the preferred ways of retrieving information quickly.

According to widely cited statistics, 50% of searches in 2020 were voice-based, and 31% of mobile phone owners use voice search at least once a week. More than half of people reported that they prefer to ask about the weather or search for a specific product using voice commands.

Oh, the irony: language is the biggest hurdle for VUIs

Yet VUIs are not spreading as fast as you might expect. Developers achieve quite good results in the most widely spoken world languages, but for most people, neither English nor Chinese is their mother tongue.

Although English has become a common language, Brexit has brought about a rather strange situation in the European Union: the EU recognizes 24 official languages, yet only a few million EU citizens, namely the Irish, speak the common language as their mother tongue.

Everyone else speaks it, well or badly, as a learned language, if they master it at all. When barely 1% of half a billion people speak a language natively and the EU recognizes 24 official languages, the average Hungarian, Italian, German, French or Swedish user rightly asks why English should be forced on them.

The answer is that we would then need systems that understand all 24 official languages equally well. Efforts have been made in this direction in the past, for example by Lernout & Hauspie Speech Products in Flanders, which acquired Dragon Systems, the maker of Dragon NaturallySpeaking, one of the foundational speech recognition programs.

The torch was then carried on by the American firm Nuance Communications, which is at the forefront of voice technology development and was, for example, Apple’s partner in developing Siri.

Creating a VUI is relatively easy

Today, the technology and available equipment have reached the point where we can say that creating a VUI in English (or in another single language) is a routine task for Ergomania. Both Amazon Alexa and Google Assistant provide a relatively easy way to create your own unique VUI.

Since natural language analysis, a more effective understanding of grammar, and a new approach to human speech and languages in general have become the basis of speech recognition, the development of VUIs has also become much more effective.

By expanding our UX design with VUIs, we can use voice-based interaction to make existing graphical interfaces easier to operate, or we can even design our own applications for voice-only products.

How to create your own VUI?

Voice-based user interfaces are significantly different from graphical user interfaces, so much so that we often can’t even apply the same design guidelines.

In the case of a pure VUI, for example, we cannot provide visible affordances. Consequently, users will not have a clear indication of what they can do with the interface or what options they have.

Users are also often unsure of what to expect from voice-based interaction because meaningful human speech is usually associated with communicating with other people rather than technology.

The challenges of VUI design

To some extent, users want to communicate with voice interfaces as they would with other people. Because speech is so fundamental to human communication, we cannot ignore users’ expectations or how speech communication between two people normally takes place, even if users are fully aware that they are talking to a device rather than a person.

So to understand users’ expectations of a VUI, we need to understand the principles that govern human communication.

What do users expect from a VUI?

In the case of live speech, we can also observe two important, unconscious phenomena that lay people involuntarily expect from voice-based interfaces.

The first is metacommunication, i.e. body language, which accounts for a significant portion of live communication (and is out of the question in a purely voice-based UI); the second is that when people talk, a lot of information is not carried by the spoken words themselves.

Using our knowledge of the context, we create a shared meaning while listening and talking. In addition, properties of the sound such as tone, pitch and the pauses between sounds carry information just as essential as the individual sounds, so a voice recognition system has to turn them into data as well.

The speed of data processing is “much slower” for a VUI than for a GUI

Although “much” may seem like a strong exaggeration by human standards of time, for computers there is a significant time difference between executing a command (text or icon) issued in a graphical interface and executing a voice command.

On the one hand, users often formulate a voice command more slowly than they would tap an icon or type a command line, especially at first, when they have to learn separately what the system can understand.

Natural Language Processing (NLP) helps here, as it significantly speeds up the process by enabling the computer to interpret the spoken text.

On the other hand, voice recognition itself is a “lengthy” process, at least for a computer: it must first record the sentences or voice commands and then process the recorded data, including contextual analysis.

Processing voice data requires so much computing capacity that it is still faster to do it on a remote server dedicated to this task than on a handheld device, but sending the data back and forth further slows down the execution of voice commands. And there are even more challenges.

It is almost impossible to imitate a person perfectly

Take an example: someone wants to order a coffee.

He goes to the barista and says:

– Good afternoon, I’d like a coffee to go. With milk, sugar, put everything in it.

The person behind the counter is either a seasoned pro who knows what the customer means, or asks for clarification:

– What kind of milk would you like, and how much sugar? Brown or white?

Also, for a human barista, “put everything in it” won’t mean putting all the sugar in the café into your cup. If the customer were talking to a digital system, however, a literal interpretation of “put everything in it” would create huge problems.

People expect to be understood when they express themselves, as in this example. Thus we, the designers, need to pay attention to the context: the barista not only understands the phrase “put everything in it” but interprets it in context, i.e. they do not add all the available flavors and condiments to the coffee, only the milk and sugar the customer requested.
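To make the barista’s reasoning concrete, here is a deliberately simplified Python sketch; it is not how Alexa or Google Assistant actually resolve meaning (they rely on trained language models, not keyword matching). It assumes a hypothetical, fixed list of possible additions and interprets “everything” as covering only the additions the customer named in the same utterance. If none were named, it returns `None` so the VUI can ask a clarifying question, just as a human barista would.

```python
import re
from typing import Optional, Set

# Hypothetical list of everything the café could add to a coffee.
AVAILABLE_ADDITIONS = {"milk", "sugar", "cinnamon", "syrup", "cream"}


def resolve_additions(utterance: str) -> Optional[Set[str]]:
    """Return the additions the customer asked for, or None if unclear."""
    words = set(re.findall(r"[a-z]+", utterance.lower()))
    mentioned = words & AVAILABLE_ADDITIONS
    if "everything" in words and not mentioned:
        # "Everything" with no items named is ambiguous: ask, don't guess.
        return None
    # A literal reading would return AVAILABLE_ADDITIONS whenever the word
    # "everything" appears; in context it only covers what was named.
    return mentioned


order = "Good afternoon, I'd like a coffee to go. With milk, sugar, put everything in it."
print(sorted(resolve_additions(order)))  # ['milk', 'sugar']
print(resolve_additions("Put everything in it."))  # None
```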

The “danger zone” we enter during the development of the VUI is this: from the point of view of voice recognition technology, it is almost impossible to grasp all the necessary contextual factors and assumptions during the brief exchange of information.

Even when artificial intelligence (in practice, machine learning and algorithms) is built into the system, VUIs still start at a huge disadvantage: people acquire contextual comprehension through decades of continuous social interaction, and most of them can also see, so they learn to associate metacommunication with speech as well.

Legal and technological challenges in Europe: GDPR and the VUI

Although the primary goal of the GDPR (General Data Protection Regulation) is to protect the data of users as data owners and to give them more control over their data, it has raised serious concerns in many areas, not only among companies but also among designers and developers.

Speech also contains data, and in many cases explicitly protected data (religious affiliation, sexual orientation, health status, etc.), or simply confidential information that people do not want to divulge to others for good reason. Just a few examples: when they leave home for a longer period of time, what is their exact address, how much they earn, who they wire money to, and so on.

If someone manages their bank accounts through a graphical interface, say a mobile banking app, it is much easier to keep things private, even in a crowd, than when issuing voice commands.

So when we plan a VUI, we do our best at Ergomania to protect user data in accordance with the relevant provisions of the GDPR.

How to create a VUI for mobile and other devices?

Until technology evolves to the point where VUIs can handle idiomatic expressions, we must show our users how they can use the VUI.

If we can, it is definitely worth designing a hybrid UI with graphical help, because it ensures optimal operation.

Let’s face it: users often have unrealistic expectations about how they can communicate with a voice-based user interface. This is partly because, by the end of the 2010s, new technologies were being developed and launched at an unprecedented rate.

From the 1970s onwards, a new technology often stayed with us for decades (think of the VHS videotape, the floppy disk, the classic mobile phone, the desktop computer and so on), whereas today’s tech advances at such a rapid pace that decades have shrunk into years (if that).

The world happily used VHS tapes for archiving home videos and movies for at least twenty years, and DVDs for ten. HD DVD has already disappeared, and Blu-ray may stay with us for a few more years, but nowadays users store their data on flash or portable drives, or in the cloud, streaming it when needed. Just think how unusual it is these days for a laptop to have a DVD drive.

Devices that use a VUI are becoming more and more popular, and it is understandable that many people don’t know what to do with a voice-based digital assistant. You can even design a voice-based game for one, as Ergomania did.

Now let’s go into the details and see how we can create a voice-based UI for mobile and other devices through custom design and development. There are a number of tools available for this, and we have now reached the point where the most difficult parts of the work have been done by others: recognizing the human voice and providing the framework for contextual text interpretation.

How to make a working VUI prototype?

If we look at user reviews of Amazon’s Echo Dot digital assistant, it becomes clear that some users have built a strong emotional connection to the device, as if it were not an inanimate object but a pet.

As it is almost impossible for VUI designers and makers to meet users’ expectations of a device or interface that is on par with a natural conversational partner, it becomes even more important to design a VUI that conveys the right amount of information and has an elegant, lovable and user-friendly design.

The following guidelines have been compiled by Ergomania experts based on what we experienced and learned by using Amazon Alexa. They serve as a kind of starting point for how to design a working VUI, taking advantage of Alexa’s voice communication skills.

Applying text-to-speech technology

Amazon Echo Dot Digital Assistant uses the Alexa voice-based user interface as the primary form of interaction, while Google Home uses Google Assistant.

Both systems can turn text into speech. This matters because, for example, when you search for a product with Google, you will hardly find any webshop that provides audio files describing its products’ properties for a digital assistant to simply play back; the assistant has to read the text out itself.
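If product data is only available as text, it can at least be prepared for speech. One possible approach, sketched below as an assumption rather than as how either assistant works internally, is to wrap the text in SSML (Speech Synthesis Markup Language), the W3C markup accepted by Alexa skills and Google’s text-to-speech services. `<speak>`, `<s>` (sentence) and `<break>` are standard SSML elements, though support for individual tags varies by platform; the function name and the 300 ms pause are illustrative choices.

```python
from typing import Dict
from xml.sax.saxutils import escape


def product_to_ssml(name: str, properties: Dict[str, str]) -> str:
    """Build an SSML document that a text-to-speech engine can read out."""
    parts = [f"<s>{escape(name)}.</s>"]
    for key, value in properties.items():
        # A short pause between properties makes a spoken list easier to follow.
        parts.append('<break time="300ms"/>')
        parts.append(f"<s>{escape(key)}: {escape(value)}.</s>")
    return "<speak>" + "".join(parts) + "</speak>"


print(product_to_ssml("Espresso cup", {"Material": "porcelain", "Volume": "80 ml"}))
```

Escaping the text matters: a product name containing “&” or “<” would otherwise produce invalid SSML that the speech engine rejects.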

Give users information on what they can do with the VUI

While a graphical user interface can clearly show users which options they can choose from, a voice-based UI cannot display the available choices, so new users base their expectations on their experience of everyday conversations.

As a result, they may ask for something that makes no sense to the system or is not possible. It is therefore well worth clearly stating the interaction options available to users.

For example, if you can order a taxi through the VUI, the system may inform the user that “you can choose from official taxi companies or Uber or Bolt drivers”.

It is also a good idea to give users a simple exit from any feature, such as the “exit” voice command.
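A minimal Python sketch of both ideas, announcing the available options and honoring a universal exit command, might look like this. Everything here is hypothetical: the phrase set in `EXIT_COMMANDS` (beyond “exit” itself), the made-up “City Taxi” company, and the simple substring matching, which stands in for the intent recognition a real platform would provide.

```python
from typing import List

# Assumed set of universal exit phrases; the guideline itself only requires "exit".
EXIT_COMMANDS = {"exit", "stop", "cancel"}


def options_prompt(options: List[str]) -> str:
    """Tell the user what they can say, including how to leave."""
    if len(options) == 1:
        listed = options[0]
    else:
        listed = ", ".join(options[:-1]) + " or " + options[-1]
    return f'You can choose from {listed}. Say "exit" at any time to leave.'


def handle(utterance: str, options: List[str]) -> str:
    text = utterance.strip().lower().rstrip(".!?")
    if text in EXIT_COMMANDS:
        return "Okay, goodbye."
    for option in options:
        if option.lower() in text:
            return f"Okay, ordering a car from {option}."
    # Unknown request: repeat the available options instead of failing silently.
    return "Sorry, I can't do that. " + options_prompt(options)


taxis = ["City Taxi", "Uber", "Bolt"]  # "City Taxi" is a made-up company name
print(options_prompt(taxis))
print(handle("I'd like a Bolt, please", taxis))
print(handle("Exit", taxis))
```

An unrecognized request is answered by repeating the options, so the user is never left guessing what they can say.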

Help users to navigate

When we use a GUI, we almost always know where we are within that system, which submenu we are getting our information from and where we can go from there. In the case of a VUI, however, the user can easily get confused or activate something by mistake.

Think of the user as someone who cannot see, entering an unknown room: clearly inform them of the possibilities, and give your answers in complete sentences.

For example:

– What is the weather forecast for Rome?

– In Rome, the weather forecast for the next week is mostly sunny, with an average temperature above twenty degrees Celsius.
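The pattern in this answer, repeating the city and the time period from the question before giving the forecast, can be captured in a response template. The Python sketch below assumes the forecast data comes from some hypothetical weather service; the function and parameter names are illustrative only.

```python
from typing import Optional


def weather_answer(city: str, period: str, summary: Optional[str], avg_temp_c: Optional[int]) -> str:
    """Answer in a complete sentence that repeats what was asked about."""
    if summary is None or avg_temp_c is None:
        # Even the fallback is a full sentence that names the city.
        return f"Sorry, I don't have a weather forecast for {city} yet."
    return (
        f"In {city}, the weather forecast for {period} is {summary}, "
        f"with an average temperature of {avg_temp_c} degrees Celsius."
    )


print(weather_answer("Rome", "the next week", "mostly sunny", 22))
```

A terse reply such as “Sunny, 22” would force the user to remember which question it answers; the full sentence removes that burden.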

Ask the user to speak clearly

You can avoid a lot of misunderstanding and frustration by telling users in advance how to communicate with the VUI. Feel free to tell them that your system understands only simple, everyday wording in whole sentences, and that slang and truncated sentences won’t work.

All of this is worth illustrating with practical examples to make it even clearer. For example, if you are curious about the daily news, good practice dictates a query like this:

“Alexa, what’s the latest news on the Telegraph?”

While the system would most definitely struggle with this:

“Yo, ’Lexa, my gal, what’s the deal-o on Torygraph?”

Limit the amount of information

When users browse visual content or lists, they can return to information they have already forgotten. This is not possible with voice content, so here you need to keep every sentence short so that users do not mix up or forget the items in a list.

Amazon recommends that you rarely give more than three options in a single interaction. If you have a longer list, it is better to group the options and start with the most popular ones.
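Grouping a long list and leading with the most popular items can be sketched in a few lines of Python. The order counts are invented for illustration, the default group size of three follows the recommendation above, and how popularity is measured is left open.

```python
from typing import Dict, List


def group_options(popularity: Dict[str, int], size: int = 3) -> List[List[str]]:
    """Split options into groups of at most `size`, most popular first."""
    # Sort by descending popularity; ties are broken alphabetically.
    ranked = sorted(popularity, key=lambda name: (-popularity[name], name))
    return [ranked[i:i + size] for i in range(0, len(ranked), size)]


# Hypothetical weekly order counts for a food-ordering skill.
orders = {"pizza": 50, "sushi": 30, "burger": 45, "salad": 10, "tacos": 20}
groups = group_options(orders)
print(groups)  # [['pizza', 'burger', 'sushi'], ['tacos', 'salad']]
```

The VUI can read out the first group right away and offer the next group only if the user asks for more options.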

Indicate that your system is active

When someone calls us by phone or via a chat application, the device itself signals the incoming call by default, and the caller almost certainly opens with something like “hello”, so both the device and the caller indicate that they are open to voice-based interaction.

For a VUI, too, it is very important for the device to let the user know that it is active, listening and waiting for voice commands. Amazon Alexa devices, for example, show that they are active with a bluish light, while the four LEDs on top of Google Home indicate its current status through color combinations and brightness.
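Behind such light signals there is usually some notion of the assistant’s current state. The following Python sketch models it as a small state machine; the four states, the event names and the indicator descriptions are all assumptions for illustration and do not describe the actual behavior of Echo or Google Home devices.

```python
from enum import Enum


class AssistantState(Enum):
    # Hypothetical indicator patterns, not the actual behavior of any device.
    IDLE = "light off"
    LISTENING = "steady light"
    PROCESSING = "pulsing light"
    SPEAKING = "dimmed light"


# (current state, event) -> next state
TRANSITIONS = {
    (AssistantState.IDLE, "wake_word"): AssistantState.LISTENING,
    (AssistantState.LISTENING, "speech_end"): AssistantState.PROCESSING,
    (AssistantState.PROCESSING, "response_ready"): AssistantState.SPEAKING,
    (AssistantState.SPEAKING, "response_done"): AssistantState.IDLE,
}


def next_state(state: AssistantState, event: str) -> AssistantState:
    # Events that do not apply in the current state leave it unchanged.
    return TRANSITIONS.get((state, event), state)


state = AssistantState.IDLE
for event in ["wake_word", "speech_end", "response_ready", "response_done"]:
    state = next_state(state, event)
    print(f"{event}: {state.name} ({state.value})")
```

Keeping the indicator tied to an explicit state means the user always sees the same signal for the same situation.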

Use prototyping tools

Ergomania experts use several tools with different strengths when designing a VUI prototype.

Dialogflow – especially recommended for projects based on Google Assistant, as it is Google’s own framework. The latest version, Dialogflow CX, provides clear and unambiguous control over the conversation, resulting in a better user experience and a better development workflow. It also comes with a new visual flow builder that makes it even easier to manage complex conversation flows.

Storyline – a great tool for prototyping Alexa Skills and developing a skill without coding; we can even upload the prototype to our own Alexa device. Compatibility with Google Assistant is also planned.

Botsociety – if you are planning a chatbot in addition to a prototype for voice-based digital assistants, or are simply looking for a great conversation design application, Botsociety is the winning choice.

Paper and Pen – It’s often easiest to grab a pen and a piece of paper (or a digital version of them) and start outlining the process, dialogs, options and branches by hand. Keep in mind that even luxury car designers sketch their first designs on paper before they sit down at a computer!

Why choose Ergomania to design your VUI prototype?

Ergomania has designed several voice-based UIs since its foundation. As Hungarian experts in UX / UI design, we have outstanding professional experience in the prototyping of VUIs and the design of sound-based interfaces.

We also have significant experience in creating voice-based interfaces that “speak” in European languages not supported by Google Home or Amazon Alexa.

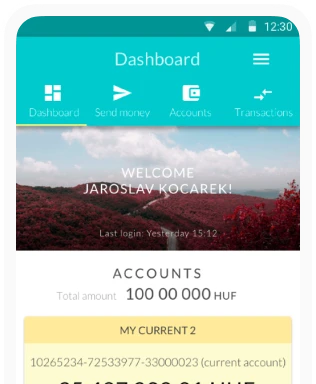

In this case, we use other tools to create the VUI prototype, such as talkabot.net together with Google’s voice API. When we built, almost from scratch, a VUI for sending money in a language not supported by Amazon’s and Google’s digital assistants, we expected people to talk to telephone customer service staff in a simple, well-structured way that would serve as an excellent pattern for voice design. Instead, we found that users kept on talking, and, making our work even harder, it was almost impossible to find recurring patterns that could be categorized.

We bridged this gap by sending a series of questions to a wide range of potential users about the phrases, sentences and dialogue turns they most often hear and use. We received enough answers to start training the system.
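How the survey answers were processed is not described here, but one plausible first step is to normalize them and keep the phrases that several respondents used independently, as candidate training phrases for an intent. The Python sketch below is hypothetical throughout (function name, threshold, sample answers); it uses `\w+` so that accented letters, for example in Hungarian, are preserved.

```python
import re
from collections import Counter
from typing import List


def training_phrases(answers: List[str], min_count: int = 2) -> List[str]:
    """Return phrases that several respondents used, most frequent first."""
    # Lowercase and keep only word characters, so "Send money to Anna!" and
    # "send money to anna" count as the same phrase.
    normalized = [" ".join(re.findall(r"\w+", a.lower())) for a in answers]
    counts = Counter(p for p in normalized if p)
    return [phrase for phrase, n in counts.most_common() if n >= min_count]


survey = [
    "Send money to Anna",
    "send money to anna!",
    "Transfer 20 euros",
    "transfer 20 euros",
    "Send money to Anna.",
    "Pay the rent",
]
print(training_phrases(survey))  # ['send money to anna', 'transfer 20 euros']
```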

Using the Google Text-to-Speech API, we have achieved such good results that the next step will be to implement a money-sending application that has limited functionality but can be used in real conditions and can be managed through VUI.

Of course, this is just a simple example, but it still highlights how capable today’s applications for prototyping VUIs are.

And if you want a truly professional VUI, contact Ergomania’s experts.