What is a Voice User Interface and why is it really needed?

Voice User Interfaces are present in many areas of life. Sometimes they are especially useful, for instance when contactless use is paramount: during an epidemic, in a public area, or at any time in an isolation ward of a hospital.

In other cases, they can be quite annoying, especially if we have to cope with a poorly designed and/or incorrectly conceived interface. Is there anyone out there who has never been upset by a telephone customer service system?

Naturally, it is not the VUI itself that is flawed, but the faulty design and badly structured workflow behind it. Moreover, these systems are only VUI-ish: users do not issue voice commands or ask questions; the system simply offers instructions and choices.

We may already know (for all the good it does us) which button to press to reach the menu section we want, yet we still have to listen to the monotonous, robotic-sounding text. No wonder most people lose their patience sooner or later. In this case, the user is never at fault! Come on, who likes having their time wasted by others?

That is why Ergomania always puts the user’s needs first when designing and creating a UI (user interface). In this article, we explore the basics of designing Voice User Interfaces and show how you can develop a lovable, optimally functioning voice interface.

Definition of VUI (Voice User Interface)

A Voice User Interface (VUI) is an interface that allows you to control a system or software, in whole or in part, using voice commands.

These VUI systems have been with us for quite some time. Think of telephone customer service systems, where a voice on the call reads out the menu items and you choose between them by pressing buttons.

The main problem of VUI: vision is our dominant sense

Given that humans are fundamentally visual beings, a pure Voice User Interface has several disadvantages. The brain perceives the world primarily through visual stimuli, i.e., information received through the eyes. Just think about it: how far can we see in clear weather if nothing blocks the view? Often more than ten kilometers.

And how far can we hear? A few kilometers. Smell? A few meters. Taste and touch work only through direct contact.

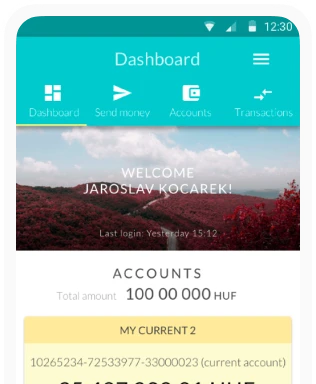

In the case of VUIs, this disadvantage is mitigated by hybrid solutions that provide visual support, such as a textual description of the options the Voice User Interface offers or of the individual steps of a process. Perhaps the best example of such a hybrid system is Erica, Bank of America’s virtual financial assistant, which is available in the bank’s mobile app, so customers feel they can take the assistant with them anywhere.
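To make the idea of a hybrid reply more concrete, here is a minimal Python sketch. The class and field names (`HybridResponse`, `speech`, `display_text`, `options`) are hypothetical and are not taken from Erica or any real assistant; the sketch only shows one reply carrying both a short spoken prompt and a full on-screen list of choices.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class HybridResponse:
    """One reply from a hypothetical hybrid voice assistant."""
    speech: str                                         # what the assistant says aloud
    display_text: str                                   # short on-screen heading
    options: List[str] = field(default_factory=list)    # choices shown as buttons


def describe_choices(prompt: str, options: List[str]) -> HybridResponse:
    """Speak only the prompt and one example; show every option on screen."""
    spoken = prompt
    if options:
        spoken += " You can say, for example, " + options[0].lower() + "."
    return HybridResponse(speech=spoken, display_text=prompt, options=list(options))


reply = describe_choices(
    "What would you like to do?",
    ["Check balance", "Pay a bill", "Find an ATM"],
)
print(reply.speech)   # What would you like to do? You can say, for example, check balance.
print(reply.options)  # ['Check balance', 'Pay a bill', 'Find an ATM']
```

The design choice here is that voice carries only what the user needs to get started, while the screen does the heavy lifting of listing every option, which is exactly where vision outperforms hearing.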

So, let’s first look at the basics of designing Voice User Interfaces.

Basics of VUI design

For Voice User Interfaces, we typically come across two approaches.

The first, older view treats the VUI as an ancillary, supplementary service, a kind of necessary evil, created mainly as a voice-based version of a graphical UI.

The earliest example of this is Interactive Voice Response (IVR), known to anyone who has ever called a customer service line. “To speak with a representative, please press zero… Hold the line until our representative connects to you.” These are well-known prompts that may appear in any IVR.
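Under the hood, a classic IVR is essentially a menu tree navigated with telephone keypad (DTMF) presses. The following Python sketch models such a tree; the menu contents and node names are hypothetical. It also illustrates the simplest form of "barge-in": the function never waits for a prompt to finish, so a caller who already knows the menu can enter the whole key sequence at once, which addresses exactly the frustration described earlier.

```python
from typing import List

# A hypothetical IVR menu: each node has a spoken prompt and maps keypad keys to child nodes.
MENU = {
    "main": {
        "prompt": "For billing, press 1. For technical support, press 2. "
                  "To speak with a representative, please press zero.",
        "keys": {"1": "billing", "2": "support", "0": "agent"},
    },
    "billing": {"prompt": "For your balance, press 1.", "keys": {"1": "balance"}},
    "support": {"prompt": "Please describe your problem after the tone.", "keys": {}},
    "balance": {"prompt": "Your balance is read out here.", "keys": {}},
    "agent": {"prompt": "Hold the line until our representative connects to you.", "keys": {}},
}


def navigate(key_presses: str, start: str = "main") -> List[str]:
    """Follow a sequence of key presses and return the menu nodes visited.

    Prompts are never awaited here, so the whole key sequence can be entered
    at once (a simplified model of barge-in).
    """
    node = start
    path = [node]
    for key in key_presses:
        next_node = MENU[node]["keys"].get(key)
        if next_node is None:
            # Unknown key: stay on the current node (a real IVR would replay the prompt).
            continue
        node = next_node
        path.append(node)
    return path


print(navigate("11"))  # ['main', 'billing', 'balance']
print(navigate("0"))   # ['main', 'agent']
```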

In the second, newer approach, also embraced by Ergomania, the VUI is the primary service, designed from the start around voice. Examples are Apple’s Siri and Amazon’s Alexa, the assistant on Echo devices.

Designed for mobile devices or IVR systems?

So, when a business needs a VUI, the primary question is which approach the designers should follow: the one used in mobile devices or the one used in IVR systems?

Although IVR is a rather dated technology, it can still be very effective. In customer service, for example, it is still a proven method, especially because users know it well, can operate it, and are not averse to it. At the same time, it is extremely important for IVR designers to avoid fundamental flaws and the usual pitfalls.

It’s no coincidence that many interactive response systems have become objects of public hatred. Blog posts have even appeared on how to get around the automation and reach a live customer service agent immediately.

The mobile approach has to be primary when designing a VUI

The purpose of an IVR is precisely to relieve customer service, since many recurring questions can be answered automatically. When an IVR fails, a flawed design, or rather a flawed approach, is always rearing its ugly head in the background: the IVR was created to represent the interests of the company, and the user is just a necessary evil in the equation.

In the case of mobile VUIs, customer demand is the primary driving force, but we can still come across two types of approaches:

- The Voice User Interface is a voice version of the graphical/text interface,

- According to the purely mobile approach, the VUI is a stand-alone interface.

When designing modern VUIs, one serious challenge is deciding whether to provide graphical, visual support; another is whether the VUI should be interactive, i.e., whether the customer can hold a conversation with it. Visual support greatly facilitates the design and implementation of a VUI, while interactivity makes the implementation orders of magnitude more difficult.

Advantages of a multimodal interface

When the user can choose between the graphical interface, a text field, and voice commands while interacting with a system, we are talking about a multimodal interface. Perhaps the best example is Google’s search engine, where you can type your query but can also search by speaking.
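One way to think about a multimodal interface is that every input channel feeds the same understanding step. The Python sketch below assumes that voice input has already been turned into text by a speech recognizer, and uses a toy keyword matcher in place of a real natural language understanding model; the intent names and keyword lists are hypothetical.

```python
import re

# Hypothetical intents with trigger keywords; a real system would use a trained NLU model.
INTENTS = {
    "weather": {"weather", "rain", "temperature", "forecast"},
    "directions": {"route", "directions", "way", "navigate"},
}


def normalize(text: str) -> list:
    # Lowercase and split into words so typed and spoken input look the same.
    return re.findall(r"[a-z']+", text.lower())


def match_intent(text: str) -> str:
    words = set(normalize(text))
    for intent, keywords in INTENTS.items():
        if words & keywords:
            return intent
    return "fallback"


def handle_input(modality: str, payload: str) -> str:
    """Route typed text and transcribed speech through the same pipeline."""
    if modality not in {"text", "voice"}:
        raise ValueError(f"unsupported modality: {modality}")
    # For "voice", payload is assumed to be the speech recognizer's transcript.
    return match_intent(payload)


print(handle_input("text", "Weather in Budapest tomorrow?"))   # weather
print(handle_input("voice", "what's the fastest route home"))  # directions
```

Keeping a single shared pipeline means the user can switch between typing and talking at any point without the system behaving differently.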

Multimodal interfaces can reach the widest possible user group: everyone can use them, including users with disabilities such as visual or hearing impairments. If the system combines a multimodal VUI with an icon-based interface, even people who are illiterate or do not speak the given language can navigate it.

Exploiting the visual capabilities of mobile devices is therefore a self-evident element of any modern VUI, and in this case the principles of graphical interface design also carry significant weight. Ergomania, coming from the world of UI design, knows all this well. But what if we want the user to have an actual dialogue with the system? This is when dialogue-based planning takes the stage.

Dialogue-based planning of the VUI

Although dialogue is completely natural among people, developers of computer systems have been struggling with speech recognition and speech interpretation (understanding what was said) for decades. While the former is fairly advanced (think of software that converts live speech into text, such as Dragon, one of the industry veterans, or Amazon Transcribe), the latter is still in its infancy. We mean that literally.

The world’s most advanced consumer systems (like Apple’s Siri or Google Assistant) are pretty much like small children: they are capable of the most basic dialogue, but we cannot expect a complex, sophisticated conversation with either of them.

Most dialogues through a VUI are actually answers to a single question

Contrary to popular belief, most current dialogue-based interactions consist of a single circle. A circle is one question-and-answer unit. For example, if someone asks Google when their next meeting starts or which is the shortest route to their destination, and is content with the answer (meaning: no follow-up questions), we are talking about a single conversational circle.

In general, most advanced systems can now handle single conversational circles. Problems begin when users want something other than one-off information. We’re still decades away from artificial intelligence as it is shown in sci-fi movies (like Tony Stark’s Jarvis or Samantha in Her).

Base your VUI design on the user

In the case of a dialogue-based interface, it is always worth starting from the user. If we want our system to handle more than one circle (which gives a company a considerable market advantage, since such systems are as rare as hens’ teeth), it’s worth starting the planning phase by asking what the customer could want in the second circle.

It goes without saying that we should not force the user into a second circle; rather, we should give them the chance to continue. Most dialogues will still be single-circle, as expected, but if word gets around that there is a system you can actually talk to, many people will at least give it a shot and freely explore the capabilities of the VUI. To support this, the system must remember what was said earlier in the dialogue or, if we are aiming even higher, not just the current conversation but previous dialogues as well. Achieving this requires a sophisticated system capable of interpreting and understanding context.

Let’s say we ask the VUI when a famous person was born, and then, in the second circle, we only ask where they were born, without naming them (as we would in real-life conversations). A well-designed VUI understands that the second question refers to the famous person mentioned in the previous circle.
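The birthplace example can be sketched in a few lines of Python. This is a deliberately simplified illustration, not how production assistants resolve references: the knowledge base is a tiny hard-coded sample, names are found by plain substring matching, and the class name `DialogueContext` is hypothetical. The key point is that the context object remembers the last person mentioned and falls back to it when a follow-up question omits the name.

```python
from typing import Optional

# Tiny sample knowledge base used only for this illustration.
PEOPLE = {
    "albert einstein": {"born_on": "14 March 1879", "born_in": "Ulm"},
    "marie curie": {"born_on": "7 November 1867", "born_in": "Warsaw"},
}


class DialogueContext:
    """Remembers the last person mentioned so follow-up questions can omit the name."""

    def __init__(self) -> None:
        self.last_person: Optional[str] = None

    def answer(self, question: str) -> str:
        q = question.lower()
        # Look for an explicitly named person; otherwise keep the remembered one.
        for name in PEOPLE:
            if name in q:
                self.last_person = name
                break
        if self.last_person is None:
            return "Who do you mean?"
        facts = PEOPLE[self.last_person]
        display = self.last_person.title()
        if "when" in q:
            return f"{display} was born on {facts['born_on']}."
        if "where" in q:
            return f"{display} was born in {facts['born_in']}."
        return "Sorry, I can only answer when or where someone was born."


ctx = DialogueContext()
print(ctx.answer("When was Albert Einstein born?"))  # Albert Einstein was born on 14 March 1879.
print(ctx.answer("And where was he born?"))          # Albert Einstein was born in Ulm.
```

Remembering previous dialogues as well, as mentioned above, would mean persisting this context per user between sessions instead of discarding it when the conversation ends.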

Tools for VUI design

Finally, let’s talk about the tools currently available that have already proven useful in VUI design. The simplest tool is pen and paper: all we have to do is jot down a sample dialogue. This is essentially a short excerpt from the interaction between the user and the VUI, much like an excerpt from a script in which two actors talk to each other.
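Once a sample dialogue moves from paper to a computer, it can be kept as simple structured data. The Python sketch below stores a hypothetical two-circle dialogue as (speaker, line) pairs, prints it like a script, and counts conversational circles, treating a circle as a user line directly followed by a VUI line. The speaker labels and dialogue content are illustrative assumptions.

```python
from typing import List, Tuple

# A hypothetical sample dialogue written like a script: (speaker, line) pairs.
SAMPLE_DIALOGUE = [
    ("User", "When does my next meeting start?"),
    ("VUI", "Your next meeting starts at 2 pm."),
    ("User", "And where is it?"),
    ("VUI", "In the small conference room."),
]


def count_circles(dialogue: List[Tuple[str, str]]) -> int:
    """Count question-and-answer units: a user line directly followed by a VUI line."""
    circles = 0
    for (speaker, _), (next_speaker, _) in zip(dialogue, dialogue[1:]):
        if speaker == "User" and next_speaker == "VUI":
            circles += 1
    return circles


def print_script(dialogue: List[Tuple[str, str]]) -> None:
    # Right-align speaker names so the lines read like a film script.
    for speaker, line in dialogue:
        print(f"{speaker.upper():>5}: {line}")


print_script(SAMPLE_DIALOGUE)
print("Circles:", count_circles(SAMPLE_DIALOGUE))  # Circles: 2
```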

Let’s prepare for a multi-circle dialogue!

The key is to think through all the situations the VUI should handle. Let’s say we’re planning a site offering a new kind of weather forecast. While a VUI capable of complex dialogue would indeed be a market-leading solution, it hardly needs to converse about Hegelian philosophy or the latest pop hits.

However, it is of utmost importance that this particular VUI can carry on a conversation about the weather. For example, in the first circle the user asks what weather is expected on the Adriatic on a specific date, and the system gives the temperature forecast and says whether rain is expected. The VUI also registers that the question was about the coast, so when a follow-up question asks about the sea temperature, it knows that this refers to the same place as the first question.
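The weather example boils down to slot carryover: details given in one circle stay filled in for the next unless the user changes them. The Python sketch below assumes two slots, location and date (the date carryover is an extra assumption beyond the text), and leaves out the actual forecast lookup; the class and method names are hypothetical.

```python
from typing import Dict, Optional


class WeatherDialogue:
    """Carries location and date over from earlier circles (hypothetical design)."""

    def __init__(self) -> None:
        self.slots: Dict[str, str] = {}

    def update(self, location: Optional[str] = None, date: Optional[str] = None) -> Dict[str, str]:
        # Only overwrite the slots the user actually mentioned in this circle.
        if location is not None:
            self.slots["location"] = location
        if date is not None:
            self.slots["date"] = date
        return dict(self.slots)

    def ask(self, topic: str, location: Optional[str] = None, date: Optional[str] = None) -> str:
        slots = self.update(location, date)
        missing = [name for name in ("location", "date") if name not in slots]
        if missing:
            # Ask a clarifying question instead of guessing.
            return f"Which {missing[0]} do you mean?"
        # A real service would fetch the forecast or sea temperature here.
        return f"Looking up {topic} for {slots['location']} on {slots['date']}."


dialogue = WeatherDialogue()
print(dialogue.ask("the forecast", location="Split", date="15 July"))
# Looking up the forecast for Split on 15 July.
print(dialogue.ask("the sea temperature"))
# Looking up the sea temperature for Split on 15 July.
```

In the second circle the user names neither the place nor the date, yet the lookup still targets Split on 15 July, which is the behaviour described above.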

Use (chatbot) design software

When our paper-based rough draft is ready and we know what kind of VUI we want, it is worth moving on to specific tools for the actual build. At this point, our best bet is usually software designed primarily for developing chatbots, because chatbots target exactly what is essential for modern, dialogue-oriented VUIs: multi-round interaction between human and machine. Popular options include Google’s Dialogflow and Voiceflow, along with Adobe XD, a general prototyping tool that also supports voice.

Dialogflow is one of the strongest competitors

Dialogflow is a natural language understanding platform that makes it easier to design a conversational user interface and integrate it into a mobile application, web application, smart device, chatbot, or even an IVR system.
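When a Dialogflow agent needs data from your own systems, it can call a webhook you provide. The Python sketch below handles such a call, assuming the Dialogflow ES (v2) webhook format as we understand it: the request JSON contains `queryResult.intent.displayName` and `queryResult.parameters`, and the reply text goes in a `fulfillmentText` field. The intent name `weather.sea_temperature` and the parameter name `geo-city` are hypothetical choices for this example, and the HTTP server part is omitted; check Google’s official webhook documentation before relying on the field names.

```python
import json


def handle_webhook(request_body: str) -> str:
    """Build a fulfillment response for a Dialogflow ES (v2) style webhook request.

    The intent name and parameter name used here are hypothetical examples.
    """
    request = json.loads(request_body)
    query_result = request.get("queryResult", {})
    intent = query_result.get("intent", {}).get("displayName", "")
    params = query_result.get("parameters", {})

    if intent == "weather.sea_temperature":
        city = params.get("geo-city") or "that location"
        # A real service would look up the sea temperature here.
        text = f"I will check the sea temperature in {city}."
    else:
        text = "Sorry, I can't help with that yet."

    # The agent reads the spoken/displayed reply from "fulfillmentText".
    return json.dumps({"fulfillmentText": text})


sample = json.dumps({
    "queryResult": {
        "queryText": "How warm is the sea in Split?",
        "parameters": {"geo-city": "Split"},
        "intent": {"displayName": "weather.sea_temperature"},
    }
})
print(handle_webhook(sample))
# {"fulfillmentText": "I will check the sea temperature in Split."}
```

Keeping the handler a pure function of the request body makes it easy to test with sample requests before wiring it into whatever web framework serves the webhook.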

Adobe XD offers a complex tool

Adobe XD (Adobe Experience Design) covers a much broader scope than Dialogflow: it is a complete toolkit aimed at all the needs of UX/UI designers. From a simple sketch to a finished design, you can create interactive prototypes for both mobile applications and websites, and its voice triggers and speech playback let you prototype voice interactions as well.

Voiceflow does not require programming knowledge

Voiceflow (originally known as Storyflow) helps you design and implement voice-based applications, from sketch through prototype to finished product. It makes it easy to build voice applications for Amazon Alexa and Google Assistant without any coding knowledge.

Conclusions

When designing Voice User Interfaces, it is always necessary to decide which approach the VUI should take. IVR systems still have a place on the market, but it is also clear that people prefer multimodal interfaces and dialogue-based systems (e.g., digital assistants and chatbots).

If you would rather entrust the burden of planning and implementation to a professional, please contact Ergomania, the Hungarian market leader in UI design.