Preserving User Trust: Ethical AI Use in UX Design

When the use of artificial intelligence (AI) in finance comes up at conferences, it is not uncommon to hear someone say, "Well, I'm not very keen on an AI having access to my financial data, let alone my account; I don't trust it." This is understandable. It is just as understandable that companies and receptive users want to enjoy the countless advantages and innovations AI promises, and what could be called the paradigm shift it brings. Since AI is here to stay, this tension raises issues that need to be addressed.

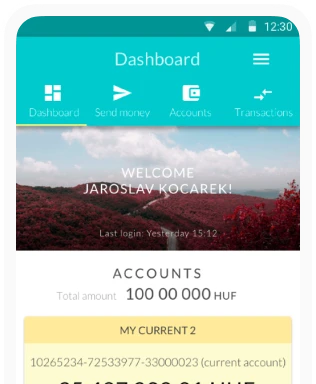

What can cause distrust of AI solutions? The press once widely reported that AI experts have "no idea" what happens inside these systems. Professor Roman V. Yampolskiy's "black box" analogy, for instance, became quite famous (Vice was among the outlets that covered it). It highlights the problems that can arise if we don't understand precisely what an AI does and why, or what biases and errors it might have. While it is an exaggeration to say that experts have no idea, the average user certainly doesn't, and this can fuel their suspicion. Think of someone whose loan application is rejected without a clear explanation. Or, in a less critical situation: how do I know I can trust an investment offer suggested by the AI living in my banking app?

In the article "Distrust in Artificial Intelligence," published in Dædalus, the journal of the American Academy of Arts and Sciences, researchers identified this very "inscrutability" of AI as the main cause of distrust. They also pointed to Explainable AI (XAI) as a possible solution, which we will return to later.

AI Bias and Trust: Learning from Past Mistakes

Another often-cited source of distrust is that AI can be prejudiced or biased. Microsoft's Tay, an early chatbot fiasco, is a good example. Because it was designed to learn from user input, Twitter users with a twisted sense of humour turned it into a Holocaust-denying racist in less than a day. Although this was a case of intentional "mis-education," the underlying problem is real. An AI model learns by identifying patterns in the vast amount of data fed into it. If that data carries biases, for example for historical reasons, the model's output will reflect them. In a notorious case, Amazon's AI-based recruitment tool systematically downgraded resumes that contained the word "women's" (e.g., "captain of the women's chess club"). The system had been trained on a decade's worth of resumes submitted predominantly by men, and it learned to treat the patterns in those resumes as markers of a successful candidate.

The Dædalus article also recalls how Airbnb acquired a company called Trooly, mainly for its patented "personality-analyzing" AI algorithm, which attempts to estimate how trustworthy someone is based on social media, blogs, and various databases. Many were outraged, even though Airbnb said it was not currently using the software. For entirely understandable reasons, the public pushed back, in effect saying, "Now, let's not do that."

AI, Autonomy, and the Illusion of Consent

The article also touches on how AI, despite all its positive capabilities, can undermine users' sense of freedom or autonomy by pre-selecting options or making certain decisions automatically. This is made worse by the illusion of consent: long, jargon-filled legal privacy policies that users accept without reading do not constitute genuine consent.

The problem can be well illustrated by the so-called autonomy paradox: Users are attracted to the convenience offered by AI (e.g., predictive text input, automated recommendations), but each layer of automation takes a decision point away from the user. The more seamless an AI experience appears, the less actual decision-making freedom the user may have.

Challenges to Trust in AI Adoption

In addition, the US National Academy of Public Administration (NAPA) points to the general distrust that characterizes our times: the polarization of societies, the easy spread of false information online, and the resulting doubt in official institutions and even in science itself. All of this also seeps into how AI is perceived, for example as "just another tool in the hands of the powerful."

All these reservations can naturally be even stronger in the financial sector. We entrust financial companies with our money and often our most sensitive data, so transparency and trust are fundamental. It is no coincidence that the International Monetary Fund (IMF) addressed the issue in a lengthy report emphasizing the importance of government regulation and strong ethical guidelines.

The Anatomy of Distrust in AI

The distrust also stems from the difference between human psychology and the nature of complex AI systems. Humans fundamentally seek cause-and-effect relationships to build mental models of how the world works. This provides a sense of predictability and control. In contrast, many advanced AIs – especially deep learning models based on neural networks – are often opaque in their internal decision-making processes, even to the engineers who created them. This opacity prevents users from forming a coherent mental model of the system's logic.

This raises an obvious question: is more transparency the cure?

The common belief is that the key to increasing trust is making AI systems more transparent: if users understand how an AI works, the reasoning goes, they will be more likely to trust it. However, a landmark study by researchers at the University of Pennsylvania and the Massachusetts Institute of Technology suggests that this assumption is not entirely correct. According to the research, lay users' trust is influenced much more by demonstrable performance and reliability than by explanations of internal processes. Users build or lose trust based on the system's tangible results and successful operation, not on understanding its algorithms.

How Can UX Help Solve the Trust Crisis?

Trust is not a feature that can be added to an interface; it is a fundamental property of a system that is reliable, accurate, and fair. The role of UX design is to communicate this internal integrity to users and to give them practical tools.

1. XAI and Communicating Performance

As we've seen, the main driver of user trust is demonstrable performance. UX must therefore design interfaces that clearly communicate the AI's successful results and concrete benefits. XAI plays a critical role here, though not by directly convincing lay users. Rather, XAI is a tool for building a trustworthy system: it helps developers debug and ethically audit the system, and it allows expert users to weigh AI suggestions when making informed decisions. The task of UX is to present these explanations in the right context and in an understandable way.

In practice, this can be realized in several ways.

- Local explanations show why the user received a specific, personalized recommendation (e.g., "We recommend this because you recently viewed product X.").

- Feature importance visually ranks which factors had the greatest weight in a decision (e.g., in an insurance premium calculation, driving history had a high impact, while vehicle age had a medium impact).

- Counterfactual explanations provide concrete, actionable advice, for example, by showing what would need to change for a rejected loan application to be approved.

- Example-based explanations show an analyst a similar previous case that helps them understand the logic of the current decision.
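To make two of these techniques more concrete, here is a minimal Python sketch of feature importance and counterfactual explanations for a hypothetical linear loan-scoring model. The feature names, weights, reference applicant, and approval threshold are all invented for illustration. Real credit models are rarely this simple, and non-linear models would need dedicated XAI methods. Because this toy model is linear, each feature's contribution can be measured exactly as its weight times the applicant's deviation from a reference applicant, and the contributions add up to the difference between the two scores.

```python
# Hypothetical linear loan-scoring model: score >= APPROVAL_THRESHOLD means "approve".
WEIGHTS = {
    "income_k": 0.04,         # annual income in thousands
    "debt_to_income": -5.0,   # debt-to-income ratio (0 to 1)
    "credit_years": 0.15,     # length of credit history in years
    "late_payments": -0.8,    # late payments in the last two years
}
BIAS = -0.5
APPROVAL_THRESHOLD = 0.0

# Hypothetical "typical applicant" used as the baseline for contributions.
REFERENCE = {"income_k": 50.0, "debt_to_income": 0.35, "credit_years": 8.0, "late_payments": 1.0}

# Only features the applicant can realistically change, with their lowest feasible values.
# Past late payments and credit history length cannot be changed, so they are excluded.
ACTIONABLE_LOWER_BOUNDS = {"income_k": 0.0, "debt_to_income": 0.0}


def score(applicant):
    return BIAS + sum(w * applicant[f] for f, w in WEIGHTS.items())


def feature_contributions(applicant):
    """Per-feature contribution relative to REFERENCE, largest absolute effect first."""
    contribs = {f: w * (applicant[f] - REFERENCE[f]) for f, w in WEIGHTS.items()}
    return sorted(contribs.items(), key=lambda item: abs(item[1]), reverse=True)


def single_feature_counterfactuals(applicant):
    """For each actionable feature, the value that alone would reach the threshold.

    Returns an empty dict if the applicant is already approved. A feature maps to
    None if the required value falls below its feasible lower bound.
    """
    gap = APPROVAL_THRESHOLD - score(applicant)
    if gap <= 0:
        return {}
    result = {}
    for f, lower in ACTIONABLE_LOWER_BOUNDS.items():
        target = applicant[f] + gap / WEIGHTS[f]  # linear model: solve w * delta = gap
        result[f] = target if target >= lower else None
    return result


applicant = {"income_k": 45.0, "debt_to_income": 0.42, "credit_years": 6.0, "late_payments": 1.0}
print(f"score: {score(applicant):.2f}")
for feature, contribution in feature_contributions(applicant):
    print(f"  {feature}: {contribution:+.2f}")
for feature, target in single_feature_counterfactuals(applicant).items():
    print(f"  {feature} -> {target:.2f} would reach the approval threshold"
          if target is not None else f"  {feature}: no feasible value")
```

The counterfactual function deliberately considers only features the applicant could plausibly change, because advice to alter past late payments would not be actionable. An interface would then turn the output into plain language, for example: "Your application would have been approved with a debt-to-income ratio of about 0.28 or lower."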

2. Designing User Control and Meaningful Friction

Design also plays a huge role in strengthening users' sense of freedom. At the UX level, adjustable autonomy, which lets users decide how much the AI may do on their behalf, can help strike this balance. However, designers must consciously manage the autonomy paradox. The solution is meaningful friction: strategically reinserting decision points where user autonomy matters most (e.g., data-sharing permissions, financial transactions). It is also essential to include undo buttons, feedback options, and human review for sensitive decisions.
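The following Python sketch shows one way adjustable autonomy and meaningful friction could be modelled. The action names, the three autonomy levels, and the default policy are hypothetical. Routine actions run automatically, data sharing and fund transfers require the user's explicit confirmation, and adverse credit decisions go to a human reviewer. Unknown actions fall back to the most restrictive level, and executed actions are kept in a history so they can be undone.

```python
from dataclasses import dataclass, field
from enum import Enum


class Autonomy(Enum):
    AUTO = "auto"                  # the AI may act without asking
    CONFIRM = "confirm"            # meaningful friction: explicit user confirmation required
    HUMAN_REVIEW = "human_review"  # a human reviewer makes the final call


# Hypothetical default policy: the more sensitive the action, the less autonomy the AI gets.
DEFAULT_POLICY = {
    "categorize_transaction": Autonomy.AUTO,
    "share_data_with_partner": Autonomy.CONFIRM,
    "transfer_funds": Autonomy.CONFIRM,
    "decline_credit_application": Autonomy.HUMAN_REVIEW,
}


@dataclass
class AssistantPolicy:
    policy: dict = field(default_factory=lambda: dict(DEFAULT_POLICY))
    history: list = field(default_factory=list)       # executed actions, newest last
    review_queue: list = field(default_factory=list)  # actions waiting for a human

    def set_autonomy(self, action, level):
        """Adjustable autonomy: the user grants or withdraws the AI's discretion per action."""
        self.policy[action] = level

    def propose(self, action, details, confirm=None):
        """Handle an AI-proposed action according to the policy and return the outcome."""
        level = self.policy.get(action, Autonomy.HUMAN_REVIEW)  # unknown: most restrictive
        if level is Autonomy.HUMAN_REVIEW:
            self.review_queue.append((action, details))
            return "queued for human review"
        if level is Autonomy.CONFIRM and (confirm is None or not confirm(action, details)):
            return "not executed"
        self.history.append((action, details))
        return "executed"

    def undo_last(self):
        """Undo button. A real system would run a compensating action (e.g. reverse a
        transfer); here the most recent action is simply removed from the history."""
        return self.history.pop() if self.history else None


assistant = AssistantPolicy()
print(assistant.propose("categorize_transaction", {"id": 1}))
print(assistant.propose("transfer_funds", {"amount": 500}))
print(assistant.propose("transfer_funds", {"amount": 500}, confirm=lambda a, d: True))
print(assistant.propose("decline_credit_application", {"id": 7}))
print(assistant.undo_last())
```

Users can relax or tighten the policy per action with `set_autonomy`. The defaults, however, place the confirmation steps at exactly the decision points that the autonomy paradox would otherwise remove.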

3. Handling Bias in Practice

Material published by INDIAai, India's national AI portal, also highlights the importance of training on diverse data and of human supervision. In practice, this means the following:

- Inclusive research and testing: Actively involve users from different demographic, cultural, and ability groups in all stages of the design process.

- Robust feedback mechanisms: Provide easily accessible channels for users to report perceived biases or errors.

- Bias audits and red teaming: Regularly examine the data and models through formal audits, and run red-team (adversarial) exercises in which a dedicated team tries to break the system to uncover unintended harmful consequences.
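The sketch below covers one small, concrete part of a bias audit. It compares approval rates across groups in a log of past decisions and flags any group whose rate falls below 80% of the most favoured group's rate. The 0.8 threshold follows the "four-fifths rule" from US employment-selection guidance and is used here only as a common rule of thumb. The group labels and the decision log are invented. A ratio above the threshold does not prove that a system is fair, and a flag is a prompt for human investigation, not a verdict.

```python
from collections import defaultdict


def selection_rates(decisions):
    """decisions: iterable of (group, approved) pairs. Returns the approval rate per group."""
    totals, approved = defaultdict(int), defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    return {group: approved[group] / totals[group] for group in totals}


def disparate_impact_report(decisions, threshold=0.8):
    """Compare each group's rate with the highest rate and flag ratios below threshold."""
    rates = selection_rates(decisions)
    best = max(rates.values())
    report = {}
    for group, rate in rates.items():
        ratio = rate / best if best > 0 else 1.0  # no approvals anywhere: nothing to compare
        report[group] = {"rate": rate, "ratio": ratio, "flagged": ratio < threshold}
    return report


# Hypothetical decision log: 100 applications from each of two groups.
log = ([("group_a", True)] * 60 + [("group_a", False)] * 40
       + [("group_b", True)] * 40 + [("group_b", False)] * 60)

for group, row in disparate_impact_report(log).items():
    status = "FLAG for review" if row["flagged"] else "ok"
    print(f"{group}: rate={row['rate']:.2f} ratio={row['ratio']:.2f} {status}")
```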

Regulation and Ethical Frameworks

By now, official bodies and industry leaders have recognized the importance of regulation, and a remarkable consensus on the basic principles of responsible AI has emerged across the various frameworks.

The European Union's AI Act focuses primarily on managing risks. The AI principles adopted by OECD countries place more emphasis on protecting human rights and democratic values, while UNESCO's Recommendation on the Ethics of Artificial Intelligence focuses on supporting inclusive, peaceful, and just societies.

The frameworks developed by industry leaders (e.g., IBM, Google) rest on similar principles, which suggests that a kind of global standard is emerging. Although their wording differs, the common points are clear: almost all frameworks emphasize fairness and the fight against bias, transparency and explainability, clear accountability and responsibilities, strict data protection, and human-centricity as a guiding principle.

Ethical AI: The Business Model Built on Trust

Although it is easy to see all these regulations and recommendations as hindering development, the reality is that the ethical use of AI in UX is not only morally right but also commercially viable. Principles alone are not enough. Ethics must be embedded in everyday operations:

- Human-Centered Design (HCD) as the basic philosophy: An AI system cannot be fair if it was not designed with a deep, empathetic understanding of the users. Transparency is also meaningless if the explanations are incomprehensible to the user.

- Cross-functional teams: Ethical AI cannot be the responsibility of a single department. Lawyers, ethicists, and subject matter experts must be involved from the very beginning of development, alongside AI engineers and UX designers.

- "Builders, not judges" mentality: Ethics experts should not be gatekeepers who pass judgment at the end of the process. They should be members of the product development teams who help integrate ethical considerations into the system architecture from the start.

As we have seen, it is user trust that really pays off. We earn users' trust by making the product, the interface, and the use of AI transparent, understandable, and free of bias, by preserving their sense of autonomy, and by being visibly committed to fairness. In return, we gain more and more loyal users who are also increasingly likely to recommend our product or service to others.

The ethical use of AI can strengthen a brand's reputation and, of course, help prevent legal problems in a regulatory environment that is still changing rapidly. As the American Academy puts it: "Entities must compete in the marketplace for trust and reputation, face ratings from external observers, and contribute to the development of industry standards. Trust must be earned."