More Popular Than Expected: The Secret of The Best Voice-user Interfaces

Personal assistants that communicate through a VUI, or voice-user interface, have become much more popular than even the largest industry players expected. Amazon, Microsoft, Google, and Apple each have their own digital assistant (developed strictly in-house) on offer, and, to tell the truth, each one is better at something than its competitors.

Currently there are tools available on the market that provide a somewhat better customer experience, despite the fact that their rivals have decades of experience in the world of voice control and voice recognition. Their secret lies not in marketing or design, but in the approach to the task.

Our article shows you how Microsoft Cortana, Google Assistant, Siri and Echo have become market leaders (and how their popularity has risen), and how they managed to stand out even in this really crowded field.

The dawn of digital personal assistants

The idea of sound-based interfaces, of systems controlled by voice interaction, is not new. In fact, the world of ancient and modern tales is full of machines and objects that are controlled by sound.

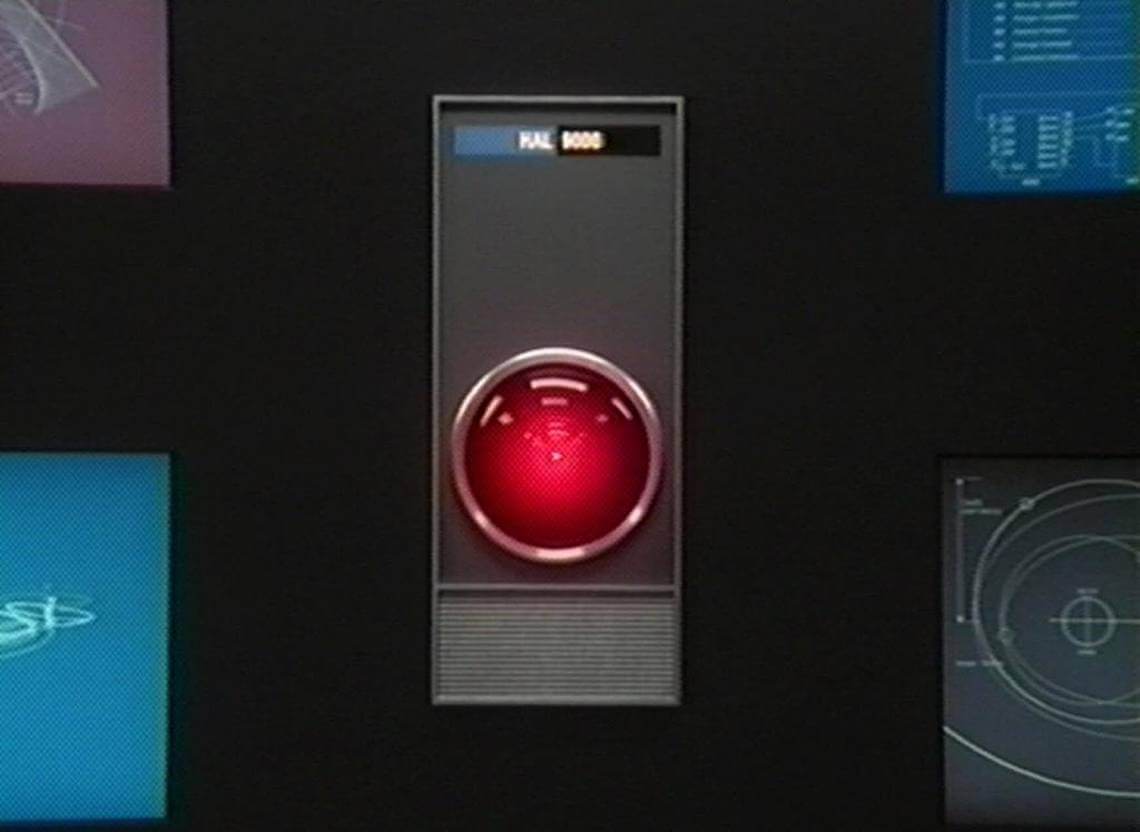

Ali Baba opens the treasure cave door with a voice command: “Open Sesame!” The Wishing Table provides food upon hearing “Cover thyself!” HAL of 2001: A Space Odyssey and all the androids seen in sci-fi movies and series have a VUI; what’s more, they have a contextual, dialogue-based VUI: they are able to interpret questions based on previous interactions.

However, it was only at the end of the twentieth century that the technology matured enough to give birth to real voice-controlled, digital personal assistants. Josh Clark’s Magical UX idea merits a shout-out: it is a system that uses basically anything as an interface in “Internet of Things” systems, controlling everything from a distance with mere words and gestures.

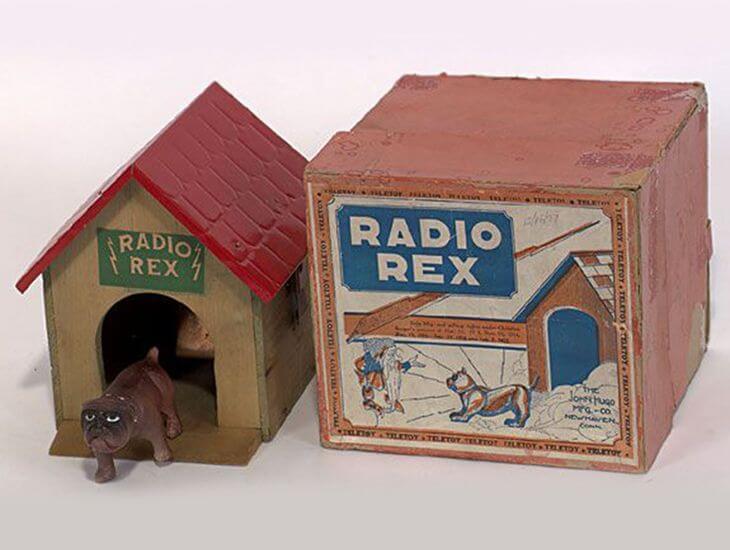

Of course, voice-controlled devices, or ones that were capable of interaction, appeared much earlier: the first was a toy called Radio Rex. It was a dog in a doghouse that recognized its name when called and came out of its house, all this in 1911. Forty years later, Bell Labs came up with the first truly usable voice recognition machine, Audrey, short for Automatic Digit Recognition system.

The first chatbot, called ELIZA, debuted in 1966, allowing interaction between man and machine. Ten years later the U.S. Department of Defense developed “Harpy,” a bot able to recognize a thousand words.

The nineties brought a breakthrough in the line of digital assistants

In the eighties and nineties, IBM, Dragon, and Philips, among others, came up with significant improvements in voice recognition and voice-user interaction solutions: the first real smartphone can also be attributed to the IBM name.

It was called IBM Simon. It appeared on the market in 1994, had a full touch screen, could send emails and faxes, and even included a drawing program and a game among its applications.

The Belgian company called Lernout & Hauspie also achieved significant advances in speech recognition. Their technologies were later acquired by Nuance Communications, the firm that also owns Dragon.

The first real digital assistant and chatbot was SmarterChild from Colloquis in 2001: users were able to interact with it through the chat programs of the time (AIM and MSN Messenger). It was able to answer simple questions or record an appointment in a calendar. True, all of this happened through text commands and interaction; it was still unable to recognize speech.

The first VUI-capable virtual assistant made its debut with Siri

However, if you want to know about the very first digital personal assistant with an actual voice-user interface, you might want to turn on any iPhone from the 4S onward, because that is where you will hear it.

Siri was introduced by Apple back in October 2011. Of course, as is customary in the modern IT industry, this was not Apple’s own invention either. They just acquired and further developed Siri Inc.

Siri is introduced in 2011

Siri and Cortana: enter the “girls”

Originally able to perform simple tasks following voice-user instructions, like sending an SMS or making a phone call, Siri was soon developed into a complex assistant capable of browsing the net or giving restaurant recommendations.

Apple was heavily criticised from the beginning because Siri was not always able to interpret questions, and because of annoyances such as being able to set only one reminder via the assistant. Users flocked back to using text mode instead of voice control, for it was far more reliable.

Despite all of Siri’s faults, the assistant became extremely popular, and soon Apple’s two biggest rivals, Microsoft and Google, also launched their own VUI-capable virtual assistants.

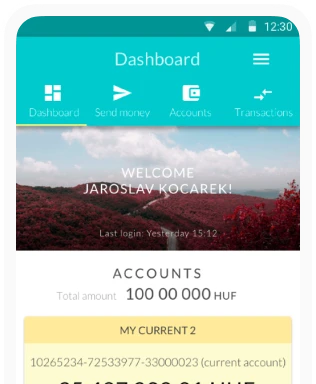

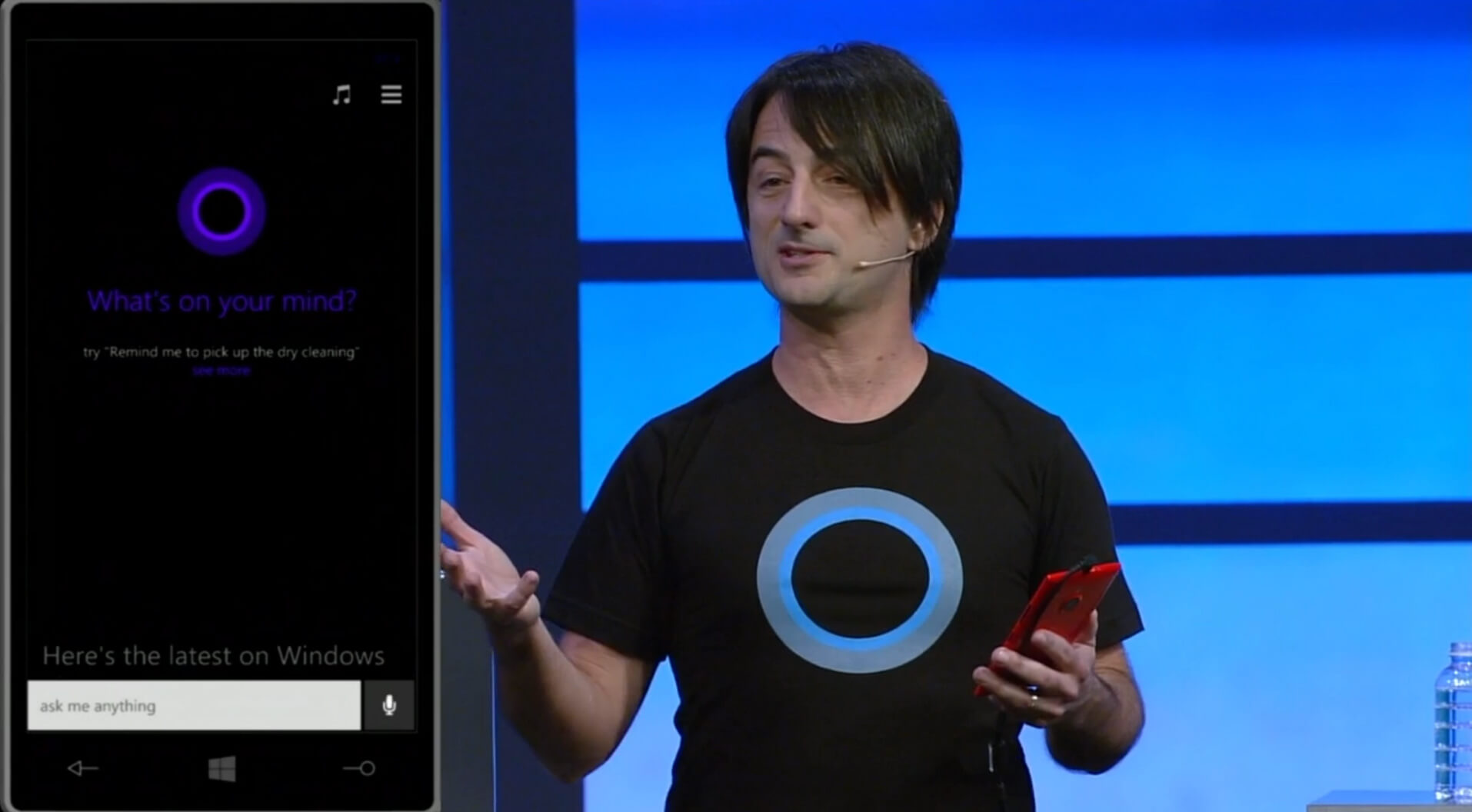

Microsoft Cortana was presented to the general public in 2014. The name was familiar to gamers, as the digital assistant in the game Halo (also released by Microsoft, in 2001) was called Cortana as well, and its American release is voiced by the same actress, Jennifer Lee Taylor, who lent her voice to Cortana in the game.

Google’s assistant is gender-neutral and can carry a conversation

Google Assistant was launched in 2016 and, unlike its competitors, received neither a male nor a female name. It can be controlled via one of the most advanced VUIs and text interfaces. At first its knowledge base was on par with Siri’s, but by now it has grown into a complex system capable of handling a variety of devices and is available on more and more smart devices.

In terms of basic functionality it matches Google Now, but its huge advantage is being capable of responding to queries in a synthesized voice due to the Duplex system added to it after launch. It even manages its tasks by imitating human speech – for example, it is capable of booking a table at a restaurant so that no one would realize they were talking to a machine.

The rather futuristic picture painted above is somewhat nuanced by the fact that a quarter of the calls mentioned were actually made by people; and in 15 percent of the dialogues, people had to take the place of the machine during the conversation.

Attentive assistant instead of warrior girl: Amazon Alexa

Amazon also made its own VUI-based assistant, Alexa, available in 2014, although its measurable success only came about in 2016 when Echo was launched, a device that can also act as a real home control center, in addition to being a personal assistant providing a number of services with pure voice-user control.

How is it possible that although Amazon only made its debut with Alexa and Echo in 2014, from 2017 onwards this online commercial giant began providing an open design service that would allow anyone to implement their own VUI-based interface design in digital assistants? What makes a VUI-based device really outstanding?

Amazon’s Alexa is moving into Echo

The list of services provided by Echo ranges from playing music and audiobooks, through making to-do lists, to managing smart homes: all the things Siri or the Google Assistant can do.

The key to its popularity, and the main reason Alexa became more successful through Echo than Siri did, is that Siri was only designed to control “iProducts”. Unlike Siri and Google Assistant, Echo is a targeted development for interaction through VUI, putting voice-user management above everything else!

The designers of Echo based the user experience on real human dialogue and specifically designed the VUI knowing that at first the system could only be controlled via voice commands. It was therefore vital that Alexa understood human speech perfectly and was able to interpret it in context: essentially, to engage in dialogue.

Hound: interpreting the context

SoundHound, a company founded in 2005, launched an app called Hound in 2015. Not unlike Alexa, it is capable of understanding natural human speech, including far more complex utterances than Siri could handle. At its launch it already interpreted the full context of utterances and understood the real intentions of users better, since it treated the spoken question only as a reference point.

The success of Hound is perhaps best illustrated by the fact that companies such as Tencent, Nvidia, Samsung, Daimler and Hyundai have started funding its developers.

How do you build a really successful VUI from the ground up?

The examples cited so far illustrate well what the decisive difference is between digital assistants. One school of thought, represented by Siri and Cortana, is characterized by the subordinate VUI, while the other, with Alexa and Hound in its ranks, showcases the primacy of the VUI.

When it comes to VUI design, Ergomania also primarily represents the latter, since all the relevant trends show that users are much more willing to use VUI-based devices that are primarily designed to be voice-controlled, contextual systems.

Here are the essential aspects of creating a solution akin to Hound or Alexa.

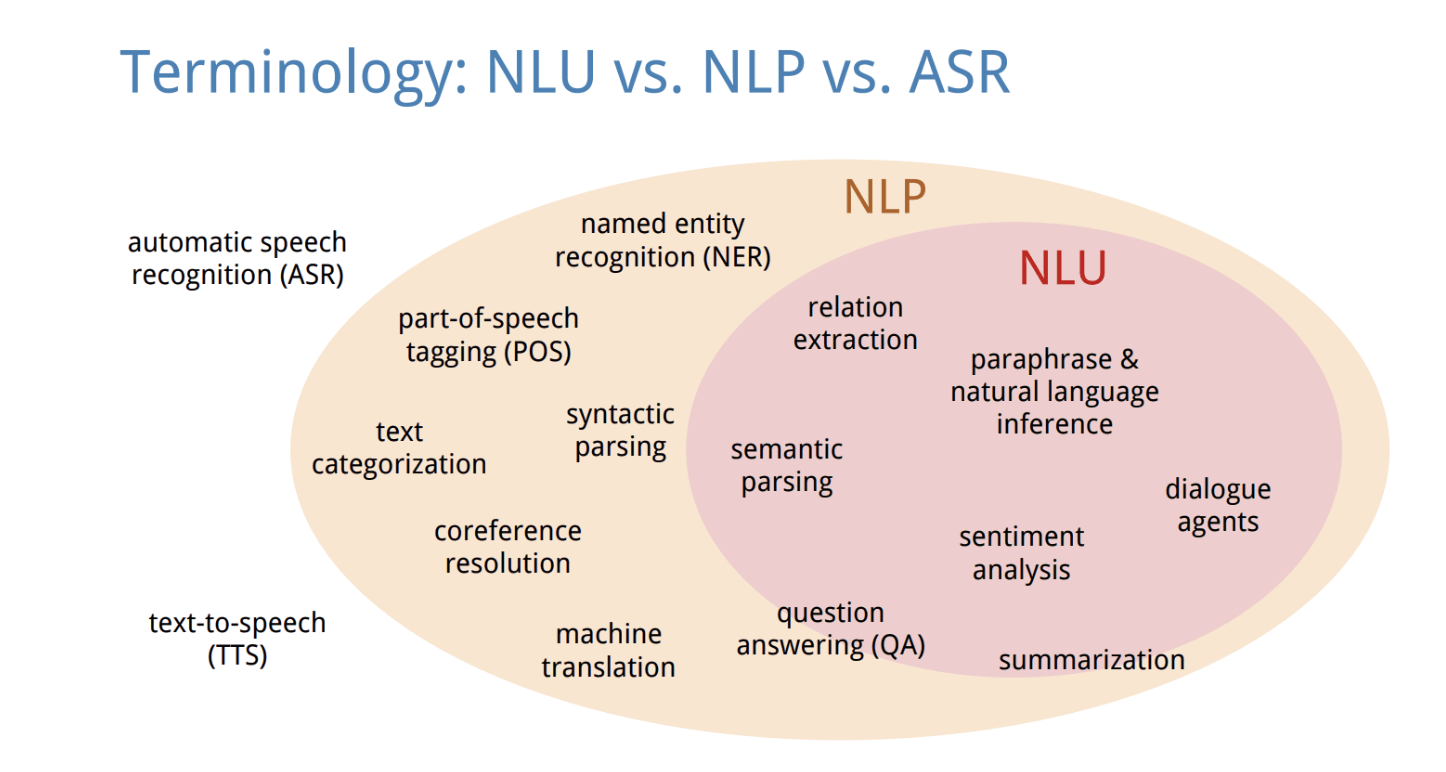

Voice recognition is based on NLU instead of SR

Natural-language understanding (NLU for short) differs from speech recognition (SR) in that it examines the text in its interpretive and contextual environment. The recognition of human speech and the identification of individual words are now so advanced that the emphasis today is much more on NLU than on the “perception” of spoken words.

For questions that require one-word answers, the best systems are also able to handle non-standard answers. For example, instead of “yes”, these accept “of course”, “yeah”, and “you bet”, answers with the same meaning.
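The idea of accepting non-standard answers can be sketched in a few lines of Python. This is only a minimal illustration: the synonym sets and the function names `normalize` and `interpret_yes_no` are assumptions made for this example, and a real NLU component would typically learn such equivalences from data rather than from a hand-written list.

```python
import re
from typing import Optional

# Hypothetical synonym sets, invented for this example.
AFFIRMATIVE = {"yes", "yeah", "yep", "sure", "of course", "you bet"}
NEGATIVE = {"no", "nope", "nah", "not really", "no way"}


def normalize(utterance: str) -> str:
    """Lowercase the text, drop punctuation and collapse whitespace."""
    cleaned = re.sub(r"[^a-z\s]", "", utterance.lower())
    return " ".join(cleaned.split())


def interpret_yes_no(utterance: str) -> Optional[str]:
    """Map a free-form reply to 'yes' or 'no', or None if it is unclear."""
    text = normalize(utterance)
    if text in AFFIRMATIVE:
        return "yes"
    if text in NEGATIVE:
        return "no"
    return None  # the dialogue should ask again or rephrase the question
```

With this sketch, `interpret_yes_no("You bet!")` and `interpret_yes_no("Of course.")` both resolve to `"yes"`, while an unclear reply such as "maybe later" returns `None` so that the system can ask again.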

Dialogue management

When Ergomania designs a really good, flexible VUI, they put more energy into dialogue management. This covers all the ways the system handles the information and choices it has already gathered during the dialogue, and how it decides what should happen next to achieve the user’s goal.

The system therefore keeps track of what additional information is needed and the order in which to ask for it. It’s worth imagining this as an order process where several factors depend on the customer. For example, when buying tickets, the system asks what type of ticket is needed, what is the validity period, what identification number is to be used, etc.

The classic solution is the static order, where the customer cannot deviate from the order process. The modern VUI approach, on the other hand, allows the customer to decide the order in which the information is passed; the system keeps track of what is missing and asks about it.
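The flexible ordering described above is often implemented as slot filling. The following Python sketch shows the principle for the ticket example; the slot names, the prompts and the `TicketDialogue` class are hypothetical, chosen only for illustration, and a production dialogue manager would also need validation, confirmation and error recovery.

```python
from typing import Dict, Optional

# Hypothetical slots and prompts for the ticket-buying example.
SLOT_PROMPTS = {
    "ticket_type": "What type of ticket do you need?",
    "validity_period": "How long should it be valid?",
    "id_number": "Which identification number should I use?",
}


class TicketDialogue:
    """Tracks which pieces of information are still missing."""

    def __init__(self) -> None:
        self.slots: Dict[str, Optional[str]] = {name: None for name in SLOT_PROMPTS}

    def update(self, **provided: str) -> None:
        """Store whatever the customer volunteered, in any order."""
        for name, value in provided.items():
            if name not in self.slots:
                raise KeyError(f"unknown slot: {name}")
            self.slots[name] = value

    def next_prompt(self) -> Optional[str]:
        """Return the question for the first missing slot, or None when done."""
        for name, prompt in SLOT_PROMPTS.items():
            if self.slots[name] is None:
                return prompt
        return None
```

If the customer opens with the identification number and the ticket type, the system only asks about validity:

```python
dialogue = TicketDialogue()
dialogue.update(id_number="12345", ticket_type="monthly")
print(dialogue.next_prompt())  # How long should it be valid?
```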

The role of context

At Ergomania, we have on several occasions encountered VUI development requests where managing the context was one of the main challenges. Context is critical for the operation of chatbots and virtual assistants: they have to handle the information said earlier and also understand the user from incomplete utterances, often figuring out what they mean before they even say it.

A system may be prepared to handle food orders (this has become quite common during quarantine), and it is then worthwhile to find out in advance where the user is in order to recommend them restaurants based on their location.

If the system remembers the information provided earlier, it can react differently when a regularly recurring question receives a different answer, by referring to previous conditions.

For example, based on customer answers to the question “how did you sleep”, the system can suggest solutions that build on previous answers: “You slept well before. Is something worrying you?” These interactions significantly improve the customer experience, because the user will see the system as more than just a “silly computer”: rather, as a confidante who pays attention to them.
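A minimal Python sketch of this kind of memory is shown here. The `SleepCheckIn` class, the two answer labels (`"well"` and `"badly"`) and the reply texts are assumptions for illustration only; in a real assistant the answers would come from the NLU layer and the history would be stored per user.

```python
from typing import List


class SleepCheckIn:
    """Remembers earlier answers to a recurring question."""

    def __init__(self) -> None:
        self.history: List[str] = []  # earlier answers: "well" or "badly"

    def respond(self, answer: str) -> str:
        """Choose a reply that depends on the previous answer, if any."""
        previous = self.history[-1] if self.history else None
        self.history.append(answer)
        if answer == "badly" and previous == "well":
            # The answer changed, so refer back to the earlier condition.
            return "You slept well before. Is something worrying you?"
        if answer == "badly":
            return "Sorry to hear that. Would you like some tips for better sleep?"
        return "Glad to hear it!"
```

The same answer, “badly”, produces a different reply depending on what the user said last time, which is exactly what makes the system feel attentive.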

It’s a matter of calling

It seems like small potatoes, but the word used to activate the system is actually a very important matter. “OK, Google,” or “Alexa,” are two great examples of the need for an activating voice command that is:

- Easy to remember

- Very rare in live speech

- Easy for the system to understand

- Impossible to confuse with other commands

Amazon, for example, has spent a lot of resources on making sure Echo understands the word “Alexa” well. The word “Alaska” sounds very similar, so if the system is hypersensitive, the number of unwarranted activations (we are talking about an American target market) would go through the roof. If, on the other hand, it expects an accurate pronunciation, customer frustration would kick in as soon as the word “Alexa” has to be said more than once.

There was also a problem with pronouncing and understanding “OK, Google”: the sounds ‘k’ and ‘g’ are so close that non-native English speakers are unlikely to pronounce the phrase correctly, so the system may be unsure whether they wanted to activate it or not.
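The sensitivity trade-off can be illustrated with a toy Python example. Note the heavy simplification: real wake-word detectors score audio, not text, and nothing here reflects how Echo actually works. The sketch merely compares spelled-out words with the standard library’s `difflib.SequenceMatcher`; the `would_activate` function, the thresholds and the spelling “aleksa” (standing in for a slightly off pronunciation) are all assumptions for the sake of the example.

```python
from difflib import SequenceMatcher

WAKE_WORD = "alexa"


def similarity(heard: str) -> float:
    """Text similarity between the heard word and the wake word (0.0 to 1.0)."""
    return SequenceMatcher(None, WAKE_WORD, heard.lower()).ratio()


def would_activate(heard: str, threshold: float) -> bool:
    """Activate only if the heard word is similar enough to the wake word."""
    return similarity(heard) >= threshold


# Too sensitive: "alaska" wakes the device up.
print(would_activate("alaska", threshold=0.5))   # True
# Too strict: a slightly off "aleksa" is rejected.
print(would_activate("aleksa", threshold=0.9))   # False
# A middle setting accepts "aleksa" and rejects "alaska".
print(would_activate("aleksa", threshold=0.7))   # True
print(would_activate("alaska", threshold=0.7))   # False
```

Finding that middle setting for real speech, across accents and background noise, is where the resources go.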

It is quite clear that the design of a modern, voice-user interface requires far more knowledge, technological background and preparation than even ten years ago. If you need a reliable system, contact Ergomania, the interface expert.