Everything becomes manageable without touch: the effect of the COVID-19 pandemic on VUI (voice user interface) development

The COVID-19 pandemic has fundamentally changed not only our social behavior but also the way we use ordinary objects. When touching different devices carries a risk of infection, and hand sanitizer is often a rarer commodity than truffles, people think twice about what to touch.

But smart devices have become part of our lives to such an extent that checking messages has become just as automatic as scratching our noses. And in times of a pandemic, both can be dangerous.

Voice-based control is the future

The best solution, of course, is to be able to use our phones without touching the screen, and to buy a ticket or pass from a ticket machine the same way. By eliminating the need to touch various surfaces while shopping, one of the main routes of COVID-19 transmission can be blocked. The easiest way to do this is to support voice control, and the voice user interface (VUI) that enables it, as widely as possible.

What is a VUI?

VUI stands for Voice User Interface: an interface that allows users to communicate with a system through voice and speech commands. The best-known examples of VUI are virtual assistants such as Apple's Siri, Google Assistant, Microsoft Cortana, and Alexa by Amazon, the world’s largest online store.

VUI is a particularly useful solution

The main advantage of a VUI is that it lets users control various devices without having to touch the interface or even face it. There are a number of situations where voice-based control is more effective even for physically able users, such as driving, or operating public devices (such as ATMs) during a pandemic.

Contact-free use has been present for longer than we might think

If we asked an average person in the West when they first encountered a VUI, they would probably be puzzled by the question. If, however, we asked whether they had ever set up a voice command on any of their cellphones, chances are the answer would be yes.

As early as 1999, cellphones like the Ericsson R388 already offered voice-based dialing, but speech technology itself appeared decades earlier.

In fact, Bell Labs staff began working on the problem in 1936, and the resulting patent was registered in 1939. In 1962, IBM introduced a machine capable of recognizing sixteen English words, and in 1993, Apple launched a proto-VUI called Speakable Items, aimed at everyday users.

Since then, the technology has developed astonishingly, and the current COVID-19 pandemic has given innovation an even bigger boost.

[Prepare your business for non-contact use with professional support from Ergomania.]

Present and future of voice management

The general public was first introduced to voice-based computer control in sci-fi movies and TV series, or at least to filmmakers' take on VUI: characters could talk to HAL in 2001: A Space Odyssey or to the computer of the starship Enterprise in Star Trek.

Naturally, these were only works of fiction, so screenwriters didn’t have to cope with actually implementing what they had thought up, and they took for granted features that would give real-world designers serious headaches.

Designing a voice-based interface that meets modern needs is far more challenging than designing a GUI (graphical user interface). The reason is simple: vision dominates the human senses, with some estimates saying that 80% of the stimuli reaching the brain arrive through the eyes.

In addition, a GUI offers several ways to bridge language barriers, the most widely used being icons and pictograms.

For a VUI, however, this is a huge challenge because, unlike a graphical interface, a VUI provides no visual clues. Users cannot be guided with images or animations, nor is there a clear sign of what the interface can do for them or what their options are.

They cannot take in the whole interface at a glance; they are forced to follow linear, voice-based information. Of course, all this is only true for purely voice-based interfaces, like the ones used by telephone customer services.

Understandably, most companies try to combine their VUIs with a graphical interface. Over time, however, it was people living with disabilities who gave ergonomics professionals the most valuable help.

Voice-based solutions initially benefited the blind, the partially sighted and the disabled

While for everyday users VUI is more of an added convenience, for the blind, the partially sighted and those living with severe disabilities, voice control is what makes IT tools usable at all: voice commands take the place of hands, and text-to-speech replaces the graphic display.

Many developments have been geared toward their needs, which is why many people living with various disabilities can access the Internet or even make calls on cellphones.

Microsoft, for example, first integrated speech recognition into the 2002 edition of its Office suite. With Windows Vista in 2007, speech recognition became a built-in feature of a major operating system. However, the technology behind speech recognition is at least as complex as image recognition or machine translation.

The main reason for this complexity is context. People spend at least ten to fifteen years from birth learning the contextual rules of their mother tongue. This is what allows us to recognize sarcasm, irony and the like, since the meaning of individual words and phrases can change completely depending on context.

Machines can learn to distinguish a water tap from a red tube, or an apple from a peach. For a voice-based system, however, the greatest challenge is recognizing spoken words and interpreting continuous speech.

[Sign up for our newsletter here to always be among the first to know about ergonomic news!]

Voice-based control at home: advantages and disadvantages of virtual assistants

When you type “when was Gandhi born” into a search engine, algorithms interpret the query easily and the answer appears in a matter of seconds. But if the search engine hears “when were that Kante or Gandi or whatchamacallit born”, the task becomes far more difficult for the algorithms.

It would be unreasonable – or wishful thinking – to expect everyone to ask their questions slowly or issue their voice commands in a clear, well-articulated manner. Humans don’t work like that. They are nervous, tired, excited, overwhelmed, happy, muttering, sleepy, etc.

Add to this that in times of COVID-19 many people speak through a mask covering their mouth, or from behind a plastic face shield or protective clothing, and we get a pretty good idea of how complex the task is.

Fortunately, the bulk of the work has already been done, as the Silicon Valley giants have been addressing these challenges for years. Everyday users only started to think differently about VUIs after Apple released Siri in 2011.

Siri, Alexa and Cortana: help or spy?

Ever since there have been secrets in the world, those not privy to them have been trying to find them out. And those who suspect a conspiracy behind every technical innovation tend to believe that governments, and nowadays large corporations, are trying to eavesdrop on them and observe them.

Virtual assistants face the same level of distrust, and their cause is further hindered by the IT giants themselves, who seem to do everything in their power to fuel conspiracy theories. It is true that digital assistants do monitor their environment and even pass on excerpts to third parties, but for completely different reasons.

To switch on a voice-activated system without contact (with a mere voice command), the system has to listen continuously for the activating command, but it does not need to record anything at all, let alone transmit anything to anyone.
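The difference between listening and recording can be sketched in a few lines. The Python example below is a simplified model, not any vendor's actual implementation: audio frames are simulated as words, `is_wake_word` is a hypothetical stand-in for an on-device keyword spotter, and a fixed `command_length` is an assumption made for brevity. Everything heard before the wake word is checked locally and then discarded; only the short command that follows it is handed to `send`.

```python
from typing import Callable, Iterable, List


def listen(
    frames: Iterable[str],
    is_wake_word: Callable[[str], bool],
    send: Callable[[List[str]], None],
    command_length: int = 2,
) -> int:
    """Listen continuously, but forward only the frames that follow a wake word.

    Returns how many frames were checked locally and then discarded.
    """
    discarded = 0
    capturing = False
    command: List[str] = []
    for frame in frames:
        if capturing:
            # After the wake word: collect a short command, then stop capturing.
            command.append(frame)
            if len(command) == command_length:
                send(command)
                command = []
                capturing = False
        elif is_wake_word(frame):
            # Wake word detected on the device: start capturing the next frames.
            capturing = True
        else:
            # Ordinary conversation: checked on the device, never stored or sent.
            discarded += 1
    return discarded


sent: List[List[str]] = []
dropped = listen(
    ["private", "chat", "computer", "lights", "on", "more", "talk"],
    is_wake_word=lambda frame: frame == "computer",
    send=sent.append,
)
print(sent)
print(dropped)
```

Here only `['lights', 'on']` leaves the loop; the four surrounding words of conversation are never kept. A real assistant would end the command on detected silence rather than after a fixed number of frames.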

The most important aspect of VUI development is the recognition of ordinary human speech. To achieve this, an enormous number of sound samples must be processed and interpreted. During this process, some or all of the speech samples may be sent by the virtual assistant for analysis, but this does not mean that data processors should have access to any personal information.

Many esteemed newspapers and news outlets have weighed in on the matter on several occasions, including The Guardian (“Hey Siri! Stop recording and sharing my private conversations” and “Alexa, are you invading my privacy? – the dark side of our voice assistants”), CNBC (“I asked Siri, Alexa and Google Assistant if they’re spying on me – here’s what they said”), USA Today (“Hey Siri, Google and Alexa – enough with the snooping”) and, most recently, Slate.com (“Which Smart Speaker Should You Trust Most-and Least?”).

Since GDPR took effect on May 25, 2018, and following many data protection scandals, the tech giants have gone to great lengths to regain users’ trust by making serious improvements and changes to their systems.

Home assistants can take over the role of browsers

Without having to reach for a phone, let alone a desktop computer or laptop, we can use just a few voice commands to check the weather, book appointments, or get stock market news and sports results. Since 2018, we have even been able to do our shopping through Alexa, for instance.

All this becomes especially important during a pandemic, when, for hygienic reasons, users want to avoid contact with objects.

The spread of the VUI will accelerate in the near future

When designing voice-based interfaces, it is important that the system clearly describes the available interaction options. Naturally, if the VUI can be embedded in a graphical interface, that helps a lot, but it is a basic ergonomic expectation that a VUI should be fully functional on its own.

The VUI needs to tell users which functions they can access and how to use each service, but without overwhelming them with information: it should only tell them as much as they can remember.
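One way to keep a spoken menu within what listeners can remember is to read out only a few options at a time and offer the rest on request. The sketch below assumes a limit of three options per prompt; that number, the function name `build_prompts` and the “more” keyword are illustrative assumptions, not an established standard.

```python
from typing import List


def build_prompts(options: List[str], per_prompt: int = 3) -> List[str]:
    """Split a list of voice commands into short spoken prompts.

    Each prompt names at most `per_prompt` options (an assumed limit), and
    every prompt except the last tells the user how to hear the rest.
    """
    prompts: List[str] = []
    for start in range(0, len(options), per_prompt):
        chunk = options[start:start + per_prompt]
        if len(chunk) == 1:
            listed = chunk[0]
        else:
            listed = ", ".join(chunk[:-1]) + " or " + chunk[-1]
        prompt = f"You can say {listed}."
        if start + per_prompt < len(options):
            prompt += " Say 'more' to hear other options."
        prompts.append(prompt)
    return prompts


for line in build_prompts(["balance", "transfer", "cards", "help"]):
    print(line)
```

Because audio is linear, splitting the list this way lets users act on the first few options without first having to memorize every one of them.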

One of the foundations of modern UI design is the voice-based approach

During a pandemic it is also worth streamlining how these options are presented, because “social distancing” (the term should really be social cohesion and physical distancing, just saying) means fewer people can use each service at a time, so every slow interaction lengthens the queue.

One of the fundamental expectations of a VUI is therefore not only to decipher spoken language (even when it is muffled by a filter or mask), but also to inform users effectively and intelligibly about the voice commands they can issue and the range of interactions they can perform with them.

Healthcare workers and patients may benefit the most

During the current COVID-19 pandemic, contactless solutions have become extremely important, even though the virus does not spread through intact skin; it does, however, spread through the mouth, the eyes, or an open wound.

Many people have started wearing gloves, mostly ones that do not work with touchscreens, so using a touchscreen has become quite troublesome lately.

Moreover, the infection can still be transmitted by rubbing one’s eyes or touching one’s face with contaminated gloves. Contactless operation in hospitals and clinics is highly recommended for everyone even in pandemic-free periods, and especially during COVID-19.

In the healthcare industry, therefore, VUI offers an ideal solution, especially in combination with a graphical user interface, to allow fully contactless operation.

Ergomania is at the forefront of VUI development

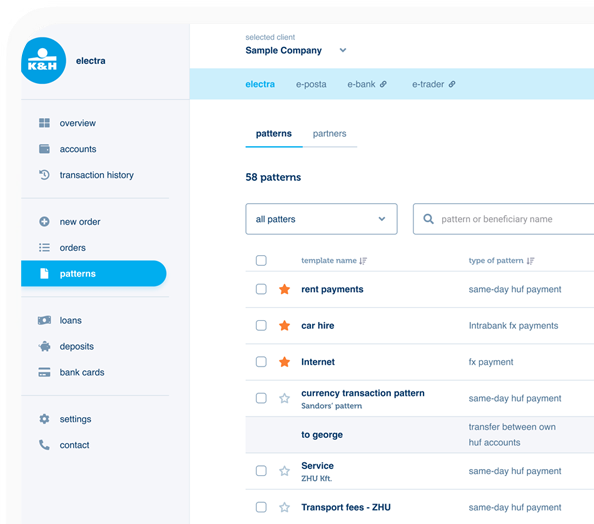

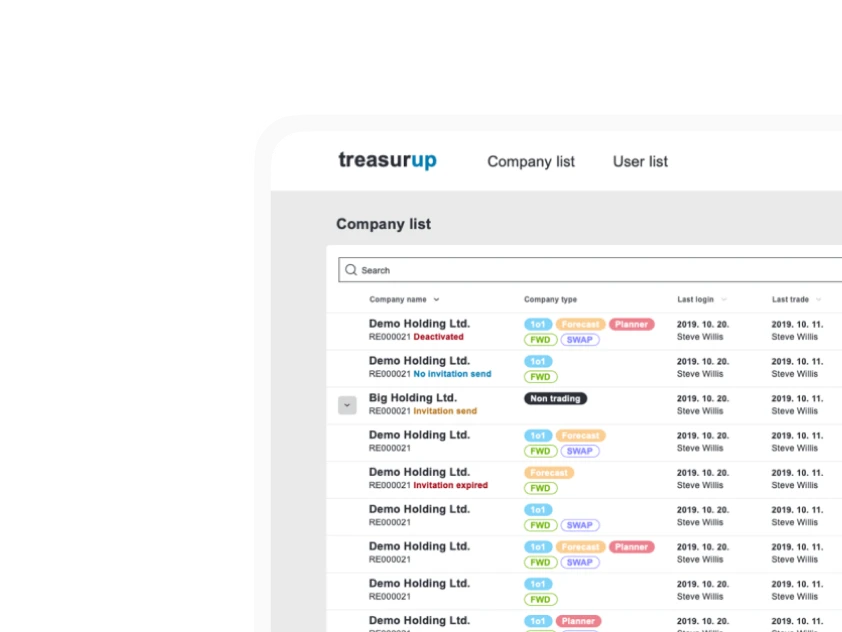

Ergomania’s approach has always been to make different user interfaces easy to use for everyone.

We design both GUI and VUI interfaces, and even hybrid control panels tailored to the needs of our customers. Throughout the design process, we keep the interests of users at the forefront.

When designing a voice-based interface, our main goal is to provide the best possible user and customer experience. In our experience, we have done our work well when people like to use our VUIs because they are simple, understandable and easy to use, and because they don’t frustrate or confuse users.

Through both our professional experience and our knowledge of the industry, we are at home in the design of enterprise intranets, industrial interfaces, and residential user interfaces.

We firmly believe that the future belongs to voice-based interfaces.

Are you ready for the future?

[What do OTP, Telenor and Joautok.hu have in common? Ergomania’s professional input helped all of them to become even more successful. Contact us!]